New Robot Learns Object Arrangement Preferences Without User Input

Oct 05, 2023

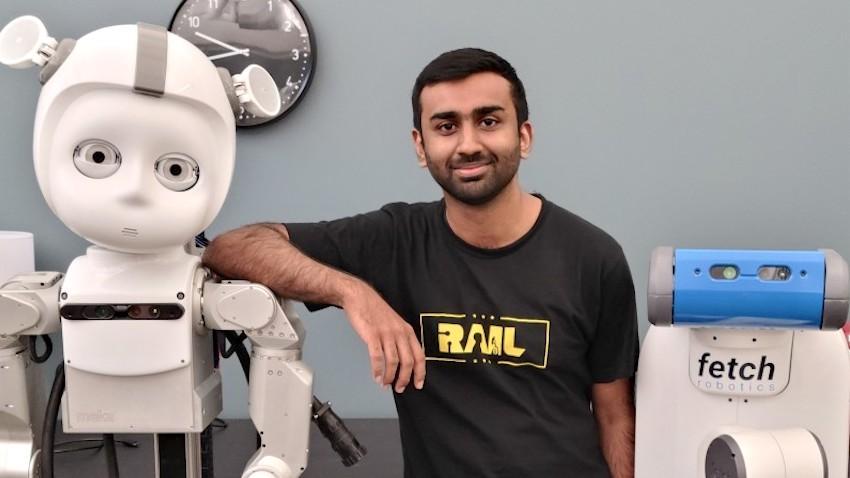

Kartik Ramachandruni (via LinkedIn) is a robotics Ph.D. student advised by School of Interactive Computing Associate Professor Sonia Chernova.

Kartik Ramachandruni knew he would need to find a unique approach to a crowded research field.

With a handful of students and researchers at Georgia Tech looking to make breakthroughs in home robotics and object rearrangement, Ramachandruni searched for what others had overlooked.

“To an extent it was challenging, but it was also an opportunity to look at what people are already doing and to get more familiar with the literature,” said Ramachandruni, a Ph.D. student in Robotics. “(Associate) Professor (Sonia) Chernova helped me in deciding how to zone in on the problem and choose a unique perspective.”

Ramachandruni started exploring how a home robot might organize objects in a pantry or refrigerator according to user preferences, without the prior instructions that existing frameworks require.

His persistence paid off. The 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) accepted Ramachandruni’s paper on a novel framework for a context-aware object rearrangement robot.

“Our goal is to build assistive robots that can perform these organizational tasks,” Ramachandruni said. “We want these assistive robots to model the user preferences for a better user experience. We don’t want the robot to come into someone’s home and be unaware of these preferences, rearrange their home in a different way, and cause the users to be distressed. At the same time, we don’t want to burden the user with explaining to the robot exactly how they want the robot to organize their home.”

Ramachandruni’s object rearrangement framework, Context-Aware Semantic Object Rearrangement (ConSOR), uses contextual clues from a pre-arranged environment to mimic how a person might arrange objects in their kitchen.

“If our ConSOR robot rearranged your fridge, it would first observe where objects are already placed to understand how you prefer to organize your fridge,” he said. “The robot then places new objects in a way that does not disrupt your organizational style.”

The only prior knowledge the robot needs is how to recognize certain objects such as a milk carton or a box of cereal. Ramachandruni said he pretrained the model on language datasets that map out objects hierarchically.

“The semantic knowledge database we use for training is a hierarchy of words similar to what you would see on a website such as Walmart, where objects are organized by shopping category,” he said. “We incorporate this commonsense knowledge about object categories to improve organizational performance.

“Embedding commonsense knowledge also means our robot can rearrange objects it hasn’t been trained on. Maybe it’s never seen a soft drink, but it generally knows what beverages are because it’s trained on another object that belongs to the beverage category.”
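
For readers who want a concrete picture of the idea, here is a toy sketch in Python. It is not the ConSOR model: the hierarchy, the object names, and the functions `shared_depth` and `place` are all hypothetical, and the sketch assumes the user groups items by shopping category. It only shows how a word hierarchy lets a system place an object it has never seen arranged, by comparing that object with what is already on each shelf.

```python
# Toy illustration only: a hand-written hierarchy and hypothetical helper
# names, not the ConSOR model or its training data.

# Each object maps to a path from broad to narrow category (assumed data).
CATEGORY_PATH = {
    "milk": ("food", "beverages", "dairy drinks"),
    "orange juice": ("food", "beverages", "juices"),
    "soft drink": ("food", "beverages", "sodas"),
    "cereal": ("food", "pantry", "breakfast"),
    "oatmeal": ("food", "pantry", "breakfast"),
    "apple": ("food", "produce", "fruit"),
}


def shared_depth(a, b):
    """Count how many leading category levels two objects share."""
    depth = 0
    for level_a, level_b in zip(CATEGORY_PATH[a], CATEGORY_PATH[b]):
        if level_a != level_b:
            break
        depth += 1
    return depth


def place(new_object, arrangement):
    """Return the container whose contents are closest to new_object on average."""
    def score(container):
        items = arrangement[container]
        if not items:
            return 0.0  # an empty container offers no evidence either way
        return sum(shared_depth(new_object, item) for item in items) / len(items)

    return max(arrangement, key=score)


# The arrangement the robot observes before placing anything new.
observed = {
    "top shelf": ["milk", "orange juice"],
    "middle shelf": ["cereal", "oatmeal"],
    "drawer": ["apple"],
}

# "soft drink" appears on no shelf, but it shares the beverage category
# with the items on the top shelf, so that shelf scores highest.
print(place("soft drink", observed))  # top shelf
```

A user who sorts by some other scheme would need a different similarity measure; the article describes ConSOR as inferring that scheme from the observed arrangement rather than assuming it, as this sketch does.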

Ramachandruni tested ConSOR against two baselines. The first used a score-based approach that learns how specific users group objects in an environment and then uses those scores to organize objects for them. The second used the GPT-3 large language model, prompted with minimal demonstrations and without fine-tuning, to determine the placement of new objects. ConSOR outperformed both baselines.

“GPT-3 was a baseline we were comparing against to see whether this huge body of common-sense knowledge can be used directly without any sort of frame,” Ramachandruni said. “The appeal of LLMs is you don’t need too much data; you just need a small data set to prompt it and give it an idea. We found the LLM did not have the correct inductive bias to correctly reason between different objects to perform this task.”

Ramachandruni said he anticipates there will be scenarios where user input is required. His future work on the project will include minimizing the effort users need in those scenarios to tell the robot their preferences.

“There are probably scenarios where it’s just easier to ask the user,” he said. “Let’s say the robot has multiple ideas of how to organize the home, and it’s having trouble deciding between them. Sometimes it’s just easier to ask the user to choose between the options. That would be a human-robot interaction addition to this framework.”

IROS is taking place this week in Detroit.

School of Interactive Computing Associate Professor Sonia Chernova lecturing in a classroom. (Photo by Terence Rushin/College of Computing)

Nathan Deen, Communications Officer I

School of Interactive Computing

nathan.deen@cc.gatech.edu