Research Paves Way for Home Robot that Can Tidy a House on Its Own

Oct 19, 2022 — Atlanta, GA

Struggling with keeping your home clean and organized? You may soon have an extra set of hands to help around the house.

Imagine a home robot that can keep a house tidy without being given any commands from its owner. Well, the next step in home robotics is here — at least virtually.

A group of doctoral and master’s students from Georgia Tech’s School of Interactive Computing, in collaboration with researchers from the University of Toronto, believes it has created the benchmark for a home robot that can keep an entire house tidy.

In their paper, Housekeep: Tidying Virtual Households Using Commonsense Reasoning, Georgia Tech doctoral candidates Harsh Agrawal and Andrew Szot, master’s students Arun Ramachandran and Sriram Yenamandra, and Yash Kant, a former research visitor at Georgia Tech who is now a doctoral candidate at Toronto, set out to prove an embodied artificial intelligence (AI) could conduct simple housekeeping tasks without explicit instructions.

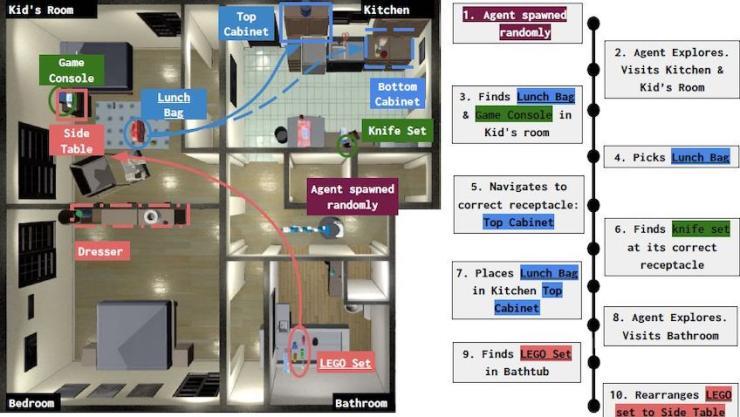

Using advanced machine learning techniques from natural language processing, the students successfully simulated a robot exploring a virtual household, identifying misplaced items, and putting them in their correct places.

Kant said most robots in embodied AI are given specific instructions for different functions, but the students wanted to be sure the robot could achieve task completion without instructions in simulation before moving on to real-world testing.

“In the actual world, things are difficult,” Kant said. “Training robots in the real world — they move around slowly; they will bump into things and people. So, we do it in simulation because you can run things at a faster speed, and you can have multiple virtual robots running.”

Dhruv Batra, an associate professor in the School of Interactive Computing and a research scientist with Meta AI, and Igor Gilitschenski, an assistant professor of mathematical and computational sciences at Toronto, served as advisors on the paper, which was accepted to the 2022 European Conference on Computer Vision, Oct. 23-27 in Tel Aviv, Israel.


In the virtual simulation, the robot spawned in a random section of the house and immediately began looking for misplaced objects. It correctly identified a misplaced lunchbox in a kid’s bedroom and moved it to the kitchen. It also located some toys left in the bathroom and moved them to the kid’s bedroom.

Agrawal said the goal of the project from the beginning was to have the robot mimic commonsense reasoning that any human would have in tidying a house. Through surveys, the team collected rearrangement preferences for 1,799 objects in 585 placements in 105 rooms.

“We collected human preferences data,” Agrawal said. “We asked people where they like to keep certain objects, and we wanted robots to have a similar notion of cleanliness in a tidy home.

“You don’t provide instructions when you ask the kids to clean up the house. It’s commonsense. You know certain things go in certain places. You know Lego blocks don’t belong in the bathroom. We thought it’d be cool if it could clean up the house without specifying instructions. As humans, we can do a bunch of these tasks without being given specific instructions.”

Creating the simulation posed several challenges. These included getting the robot to reason about the correct placement of new objects, getting it to adapt to new environments, and getting it to choose among options when a misplaced object has more than one correct location.
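
As a rough illustration of the last challenge, the sketch below shows one way a tidying agent could treat several locations as acceptable for the same object. It is a minimal example under stated assumptions, not the team's implementation: the vote data, the `min_share` threshold, and the function names are all hypothetical.

```python
from collections import Counter

# Hypothetical survey votes: object -> places chosen by annotators.
# These values are invented for illustration, not taken from the Housekeep data.
VOTES = {
    "lunchbox": ["kitchen counter", "kitchen counter",
                 "kitchen cabinet", "bedroom floor"],
    "toy": ["bedroom shelf", "bedroom shelf", "toy box", "toy box"],
}


def acceptable_placements(votes, min_share=0.3):
    """Return places picked by at least min_share of annotators, most popular first."""
    counts = Counter(votes)
    total = len(votes)
    return [place for place, n in counts.most_common() if n / total >= min_share]


def plan_move(obj, current_place, votes_by_object=VOTES, min_share=0.3):
    """Return a destination for a misplaced object, or None if it can stay put."""
    ok = acceptable_placements(votes_by_object[obj], min_share)
    if current_place in ok or not ok:
        # Already somewhere people agree on, or no placement has enough agreement.
        return None
    return ok[0]


if __name__ == "__main__":
    print(plan_move("lunchbox", "bedroom floor"))  # a kitchen location is proposed
    print(plan_move("toy", "toy box"))             # toy box is acceptable, so no move
```

Treating every sufficiently popular place as correct means the agent leaves an object alone whenever it already sits in any of them, rather than forcing a single "right" answer.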

Szot said what attracted him to the project was the idea of creating a robot that didn’t need to be told where to put something, whereas in his previous work, that’s exactly what he had to do.

“If you wanted it to do something like clean up the house, you would have to tell it, ‘Hey, robot, move that object to there,’” Szot said. “It’s very tedious to specify that. We took the first step of saying let’s give the robot some commonsense reasoning. It might not be specific to a person; it might just be capturing more generally what people think, but it captures a lot of important situations. It’s able to handle most of those situations in which people agree the object belongs there or the object doesn’t belong there.”

To supply that common sense, the team fine-tuned a large language model, one trained on text from the internet, using the human preferences it had collected. That model informs the AI that drives the robot.

“The way we approached solving this problem is we took this external source of knowledge from text on the internet and these language tasks, and so from natural language processing we took that information and used it to give our robot some idea of this common sense,” Szot said. “It wasn’t purely from the house it learned how to do these things. From articles or texts online, it was able to distill this commonsense reasoning ability and then apply it.”

Kant said language models allow the AI to distinguish between objects and judge whether those objects belong together. He added that he thinks the language model used to train the AI can be fine-tuned on content extracted from web articles about housekeeping.

“Language models have shown very promising results in trying to extract semantics, like whether two things — say an apple and fruit basket — go together in a household,” Kant said.
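
One common way to turn that idea into a score is to compare vector representations of the object and each candidate location. The sketch below assumes cosine similarity over small hand-made vectors. The `EMBEDDINGS` values are invented stand-ins for what a language model would produce, and the article does not say this is the scoring method the team used.

```python
import math

# Toy 3-dimensional "embeddings", invented for illustration only.
# A real system would obtain these vectors from a language model.
EMBEDDINGS = {
    "apple": [0.9, 0.1, 0.0],
    "fruit basket": [0.8, 0.2, 0.1],
    "bookshelf": [0.1, 0.9, 0.2],
    "bathtub": [0.0, 0.1, 0.9],
}


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_receptacles(obj, receptacles, embeddings=EMBEDDINGS):
    """Order candidate locations from most to least similar to the object."""
    return sorted(
        receptacles,
        key=lambda r: cosine(embeddings[obj], embeddings[r]),
        reverse=True,
    )


if __name__ == "__main__":
    print(rank_receptacles("apple", ["bathtub", "bookshelf", "fruit basket"]))
```

With these made-up vectors, the fruit basket ranks first for the apple and the bathtub last, which mirrors the kind of pairing judgment Kant describes.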

The team has only scratched the surface, and the virtual simulation serves only as a proof of concept. The long-term project will continue to explore new possibilities, including a robot that can tidy a household according to specific user preferences.

But the successful use of NLP methods to inform a novel AI could break new ground in the creation of systems in which organization is the focus.

“It’s a benchmark for the rest of the community to use,” Szot said. “Hopefully this is something for people to gather behind to focus on this very realistic task setting of cleaning the house. We showed that you can create these embodied agents that can use this external knowledge and learn commonsense and use it in embodied robotic settings.”

“I think the data that we collected is pretty significant in the sense that we now have a few hundred annotations for where each object should go in houses and where they’re likely to be found in untidy houses, and I think that information can guide a lot of systems,” Agrawal added. “I feel like we are starting to now see people saying all these annotations can be used for building their own systems and benchmarks.”

Nathan Deen, Communications Officer