Aug. 27, 2024

As artificial intelligence continues to transform countless areas of society, the Georgia Tech Research Institute (GTRI) is applying it to another critical area: disaster management.

GTRI is leading the development of an integrated artificial intelligence response hub for Southwest Georgia to help communities streamline disaster management and logistics. The hub aims to enhance the resilience and response efficiency of these communities, potentially saving lives and reducing economic losses. GTRI is collaborating in this effort with the Southwest Georgia Regional Commission (SWGRC), a regional planning agency that serves 14 counties and 44 cities in Southwest Georgia. The SWGRC focuses on ecosystem building in food production manufacturing, manufacturing start-ups, supply chain logistics, and workforce development.

“This will be a centralized platform that key stakeholders in Southwest Georgia can use to manage various disruption scenarios,” said GTRI Senior Research Engineer Francisco Valdes, who is leading this project.

This project is one of several initiatives undertaken by Georgia Artificial Intelligence in Manufacturing (Georgia AIM), a $65 million federal grant awarded to Georgia Tech and a coalition of partners across the state, including the Georgia Tech Manufacturing Institute.

Read the full story on the GTRI website.

News Contact

Writer: Anna Akins

Photos: Sean McNeil

Media Inquiries: michelle.gowdy@gtri.gatech.edu

Aug. 27, 2024

Professor Horacio Ahuett Garza from the Tecnológico de Monterrey recently settled into the Georgia Tech Manufacturing Institute (GTMI) community as a visiting scholar. Ahuett is a leading faculty member in the Mechanical Engineering and Advanced Materials Department at Tecnológico de Monterrey, located in Monterrey, Mexico. He earned his master's and doctoral degrees in mechanical engineering from Ohio State University more than 25 years ago. During his visit, Ahuett will interact with Georgia Tech faculty to explore research areas in smart manufacturing and Industry 4.0.

“My home university has 30 campuses across Mexico with the main campus being in Monterrey—where I was born. I’ve known Professor Tom Kurfess, executive director of GTMI, for more than 20 years. He has a faculty appointment at Tecnológico de Monterrey similar to a distinguished professor due to an agreement with Georgia Tech,” said Ahuett. “We’re both involved with advanced manufacturing but in different countries with similar processes. However, the facilities at Georgia Tech are far more advanced, such as the Advanced Manufacturing Pilot Facility (AMPF) operated by GTMI. Some of our graduate students periodically come to participate in research at Georgia Tech.”

Ahuett, who has ample manufacturing research experience, was invited to visit Georgia Tech this spring. He is using the visit as a sabbatical to deepen his understanding of advanced manufacturing and to learn about new topic areas in the field.

He recently attended The Hershey Company lecture at GTMI presented by Will Bonifant, Hershey's vice president of U.S. and Canada supply chain, and Chris Myers, vice president of engineering at Hershey. The topic was how a century-old, iconic snack company is modernizing by leveraging Industry 4.0 digital and technology solutions.

“Today, Hershey provided a good understanding of smart manufacturing and how Hershey uses its fast access to data to make quick decisions that are implemented inside the factory and its processes,” said Ahuett. “They deployed smart manufacturing processes to use fewer resources and reduce waste, yet make factory equipment changes in a timely and safe manner to deliver targeted product quantities based on customer demand.”

Ahuett noted that the proliferation of sensor technology, and the data it produces, can benefit manufacturing by reducing waste, saving energy, and generally making companies more agile in their use of factory resources. Tooling is one example. The typical life cycle of a cutting tool is generally known, and if the tool’s degradation can be measured in real time, its replacement can be scheduled at the best moment to minimize downtime, rather than waiting for the tool to break unexpectedly and shut down the process.
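To make the tooling example concrete, here is a minimal Python sketch. It assumes wear is measured periodically (for example, flank wear in millimeters) and grows roughly linearly over the tool's life. The wear limit, safety margin, and function names are hypothetical illustrations, not values or methods from GTMI or Ahuett's research. The sketch fits a least-squares line to the readings and extrapolates when the wear limit will be reached, so that a replacement can be planned ahead of failure.

```python
from __future__ import annotations

from typing import Sequence


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two readings")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("readings must be taken at distinct times")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def minutes_until_replacement(
    times_min: Sequence[float],
    wear_mm: Sequence[float],
    wear_limit_mm: float = 0.3,       # hypothetical end-of-life wear threshold
    safety_margin_min: float = 10.0,  # hypothetical buffer before predicted failure
) -> float:
    """Minutes after the last reading at which the tool should be swapped."""
    slope, intercept = fit_line(times_min, wear_mm)
    if slope <= 0:
        return float("inf")  # no measurable degradation trend yet
    time_at_limit = (wear_limit_mm - intercept) / slope
    remaining = time_at_limit - times_min[-1] - safety_margin_min
    return max(remaining, 0.0)  # 0.0 means "replace now"
```

For readings of 0.05, 0.08, 0.11, and 0.14 mm taken at 0, 10, 20, and 30 minutes, the fitted trend reaches the 0.3 mm limit at about 83 minutes, so the sketch recommends a swap roughly 43 minutes after the last reading. A production system would likely use a nonlinear wear model and account for measurement noise.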

His strongest research interest during his GTMI visit is the topic of digital twins.

“The topic of digital twins is not new; it has been around for at least 40 years. A good example is that during the Apollo moon missions NASA had digital twins. They had instruments on Earth that replicated the instruments sent on the moon missions so they could simulate actions [using data] on Earth that would occur on the actual spaceships,” said Ahuett.

“The concept of digital twins generates confusion. The first impulse is to think of a digital image as the twin of a physical entity. In principle, the digital twin simulates the behavior of the physical twin to model and make predictions. Not all processes can be modeled in real time, but some can, which provides beneficial information in a timely manner given how fast computer processors are today. Today, we have more and better tools that use data to give us greater insights.”

Ahuett will be working with robots and co-robots in collaboration with Kyle Saleeby, research engineer at GTMI, to help automate accurate measurement during a manufacturing process. Part of their research project will involve building models so that a part’s characteristic data can be tracked and stored in a digital twin that represents each specific manufactured part and also includes external data associated with that part’s manufacture.
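One way to picture a digital twin for a single manufactured part is as a record, keyed by the part's serial number, that accumulates measured characteristics alongside external process data. The Python sketch below is purely illustrative: the class names, fields, and symmetric-tolerance check are hypothetical assumptions, not the data model Ahuett and Saleeby are building.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Measurement:
    feature: str         # e.g. "bore_diameter" (hypothetical feature name)
    value_mm: float
    nominal_mm: float
    tolerance_mm: float  # symmetric +/- tolerance around the nominal value
    taken_at: datetime

    def in_tolerance(self) -> bool:
        return abs(self.value_mm - self.nominal_mm) <= self.tolerance_mm


@dataclass
class PartTwin:
    """Digital record for one specific manufactured part (hypothetical schema)."""
    serial: str
    measurements: list[Measurement] = field(default_factory=list)
    # External data tied to this part's manufacture, e.g. machine ID or ambient temperature.
    process_context: dict[str, object] = field(default_factory=dict)

    def record(self, feature: str, value_mm: float,
               nominal_mm: float, tolerance_mm: float) -> None:
        """Append a timestamped measurement, e.g. one taken by a robot-held probe."""
        self.measurements.append(
            Measurement(feature, value_mm, nominal_mm, tolerance_mm,
                        datetime.now(timezone.utc))
        )

    def out_of_tolerance(self) -> list[str]:
        """Names of measured features that fall outside their tolerance band."""
        return [m.feature for m in self.measurements if not m.in_tolerance()]


twin = PartTwin("SN-0001", process_context={"machine": "cell-3", "ambient_c": 22.5})
twin.record("bore_diameter", 10.02, 10.00, 0.05)
twin.record("flange_thickness", 4.91, 5.00, 0.05)
print(twin.out_of_tolerance())  # ['flange_thickness']
```

Keeping one instance per serial number, rather than one model per part design, is what lets the record reflect the history of that particular physical part.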

“I’m hoping my sabbatical at Georgia Tech will help me develop new competencies, new skills, and new knowledge on critical topics. I want to move research further in some areas and part of being here is to figure out some things that I didn’t know before with the help of GTMI,” said Ahuett.

News Contact

Walter Rich

Aug. 27, 2024

The Novelis Innovation Hub at Georgia Tech organized an "AI Applications Workshop" on June 8, 2023. This hybrid event took place on the Georgia Tech campus and brought together a diverse group of participants, including more than 50 scientists and engineers from Novelis, as well as faculty members, scholars, and administrators from Georgia Tech. The main purpose of the workshop was to identify synergies between Novelis's areas of interest and needs and the research and capabilities of Georgia Tech faculty in artificial intelligence (AI) and machine learning (ML), and to explore how the two organizations could collaborate effectively in leveraging AI/ML technology. Through presentations and discussions, participants identified potential areas of interest aligned with Novelis's objectives: machine learning for system modeling, diagnostics, and prognostics in manufacturing systems; materials informatics; digital engineering, including digital twins; model-based systems engineering; and the integration of AI/ML. The open discussion session focused on collaboration opportunities to accelerate the discovery, development, and optimization of materials and manufacturing processes relevant to Novelis. The desired outcome of the event was a set of follow-up actions, specifically the development of collaborative proposals and statements of work (SOWs) between Novelis and Georgia Tech. By facilitating this collaborative environment, the workshop aimed to foster meaningful partnerships and to enable the exchange of industry needs and academic research expertise, paving the way for future collaborations and advancements in AI applications.

News Contact

Walter Rich

Aug. 27, 2024

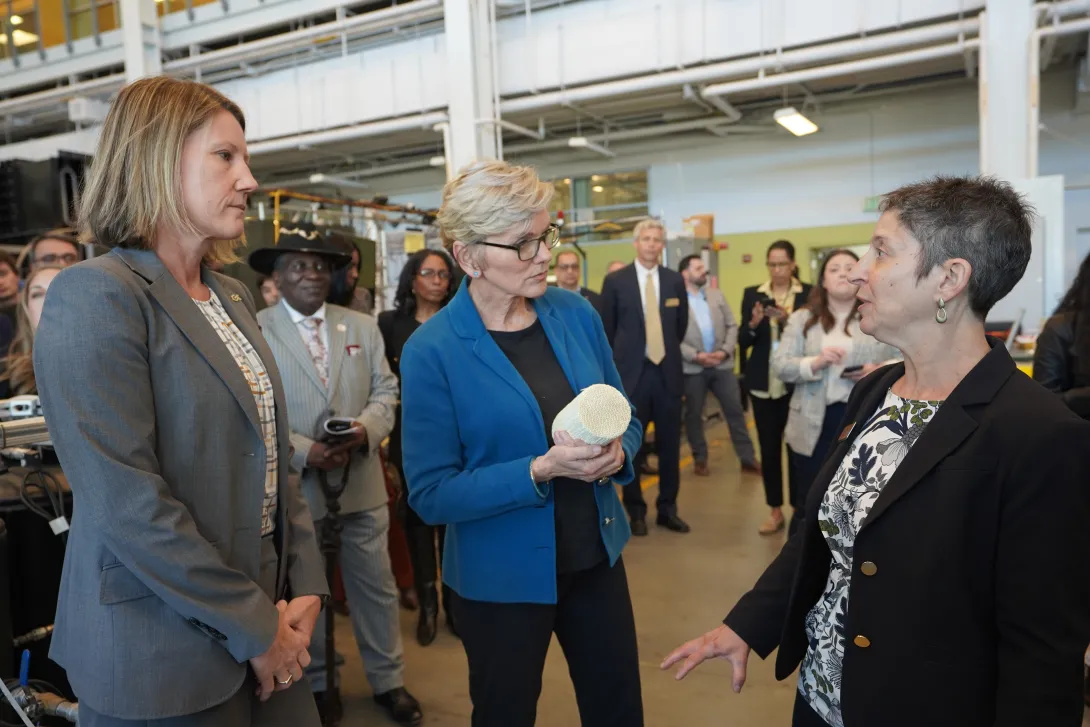

From commercialization to community engagement to partnerships with national labs and corporations, Georgia Tech leads in the development and use of direct air capture technologies.

News Contact

Shelley Wunder-Smith

shelley.wunder-smith@research.gatech.edu

Aug. 21, 2024

- Written by Benjamin Wright -

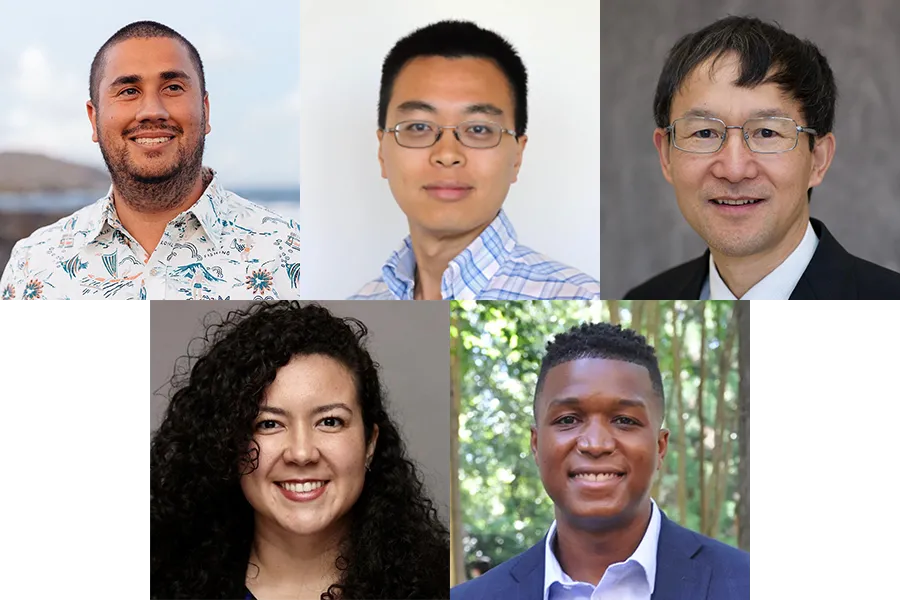

As Georgia Tech establishes itself as a national leader in AI research and education, some researchers on campus are putting AI to work to help meet sustainability goals in a range of areas including climate change adaptation and mitigation, urban farming, food distribution, and life cycle assessments while also focusing on ways to make sure AI is used ethically.

Josiah Hester, interim associate director for Community-Engaged Research in the Brook Byers Institute for Sustainable Systems (BBISS) and associate professor in the School of Interactive Computing, sees these projects as wins both from a research standpoint and for the local, national, and global communities they could affect.

“These faculty exemplify Georgia Tech's commitment to serving and partnering with communities in our research,” he says. “Sustainability is one of the most pressing issues of our time. AI gives us new tools to build more resilient communities, but the complexities and nuances in applying this emerging suite of technologies can only be solved by community members and researchers working closely together to bridge the gap. This approach to AI for sustainability strengthens the bonds between our university and our communities and makes lasting impacts due to community buy-in.”

Flood Monitoring and Carbon Storage

Peng Chen, assistant professor in the School of Computational Science and Engineering in the College of Computing, focuses on computational mathematics, data science, scientific machine learning, and parallel computing. Chen is combining these areas of expertise to develop algorithms to assist in practical applications such as flood monitoring and carbon dioxide capture and storage.

He is currently working on a National Science Foundation (NSF) project with colleagues in Georgia Tech’s School of City and Regional Planning and from the University of South Florida to develop flood models in the St. Petersburg, Florida area. As a low-lying state with more than 8,400 miles of coastline, Florida is one of the states most at risk from sea level rise and flooding caused by extreme weather events sparked by climate change.

Chen’s novel approach to flood monitoring takes existing high-resolution hydrological and hydrographical mapping and uses machine learning to incorporate real-time updates from social media users and existing traffic cameras, running rapid, low-cost simulations with deep neural networks. Current flood monitoring software is resource- and time-intensive. Chen’s goal is to produce live models that can be used to warn residents and allocate emergency response resources as conditions change. That information would be available to the general public through a portal his team is working on.

“This project focuses on one particular community in Florida,” Chen says, “but we hope this methodology will be transferable to other locations and situations affected by climate change.”

In addition to the flood-monitoring project in Florida, Chen and his colleagues are developing new methods to improve the reliability and cost-effectiveness of storing carbon dioxide in underground rock formations. The process is plagued with uncertainty about the porosity of the bedrock, the optimal distribution of monitoring wells, and the rate at which carbon dioxide can be injected without over-pressurizing the bedrock, which can lead to collapse. The new simulations are fast and inexpensive, and they help minimize the risk of failure, which also decreases the cost of construction.

“Traditional high-fidelity simulation using supercomputers takes hours and lots of resources,” says Chen. “Now we can run these simulations in under one minute using AI models without sacrificing accuracy. Even when you factor in AI training costs, this is a huge savings in time and financial resources.”

Flood monitoring and carbon capture are passion projects for Chen, who sees an opportunity to use artificial intelligence to increase the pace and decrease the cost of problem-solving.

“I’m very excited about the possibility of solving grand challenges in the sustainability area with AI and machine learning models,” he says. “Engineering problems are full of uncertainty, but by using this technology, we can characterize the uncertainty in new ways and propagate it throughout our predictions to optimize designs and maximize performance.”

Urban Farming and Optimization

Yongsheng Chen works at the intersection of food, energy, and water. As the Bonnie W. and Charles W. Moorman Professor in the School of Civil and Environmental Engineering and director of the Nutrients, Energy, and Water Center for Agriculture Technology, Chen is focused on making urban agriculture technologically feasible, financially viable, and, most importantly, sustainable. To do that he’s leveraging AI to speed up the design process and optimize farming and harvesting operations.

Chen’s closed-loop hydroponic system uses anaerobically treated wastewater for fertilization and irrigation by extracting and repurposing nutrients as fertilizer before filtering the water through polymeric membranes with nano-scale pores. Advancing filtration and purification processes depends on finding the right membrane materials to selectively separate contaminants, including antibiotics and per- and polyfluoroalkyl substances (PFAS). Chen and his team are using AI and machine learning to guide membrane material selection and fabrication to make contaminant separation as efficient as possible. Similarly, AI and machine learning are assisting in developing carbon capture materials such as ionic liquids that can retain carbon dioxide generated during wastewater treatment and redirect it to hydroponics systems, boosting food productivity.

“A fundamental angle of our research is that we do not see municipal wastewater as waste,” explains Chen. “It is a resource we can treat and recover components from to supply irrigation, fertilizer, and biogas, all while reducing the amount of energy used in conventional wastewater treatment methods.”

In addition to aiding in materials development, which reduces design time and production costs, Chen is using machine learning to optimize the growing cycle of produce, maximizing nutritional value. His USDA-funded vertical farm uses autonomous robots to measure critical cultivation parameters and take pictures without destroying plants. This data helps determine optimum environmental conditions, fertilizer supply, and harvest timing, resulting in a faster-growing, optimally nutritious plant with less fertilizer waste and lower emissions.

Chen’s work has received considerable federal funding. As the Urban Resilience and Sustainability Thrust Leader within the NSF-funded AI Institute for Advances in Optimization (AI4OPT), he has received additional funding to foster international collaboration in digital agriculture with colleagues across the United States and in Japan, Australia, and India.

Optimizing Food Distribution

At the other end of the agricultural spectrum is postdoc Rosemarie Santa González in the H. Milton Stewart School of Industrial and Systems Engineering, who is conducting her research under the supervision of Professor Chelsea White and Professor Pascal Van Hentenryck, the director of Georgia Tech’s AI Hub as well as the director of AI4OPT.

Santa González is working with the Wisconsin Food Hub Cooperative to help traditional farmers get their products into the hands of consumers as efficiently as possible to reduce hunger and food waste. Preventing food waste is a priority for both the EPA and USDA. Current estimates are that 30 to 40% of the food produced in the United States ends up in landfills. That wastes resources on the production end, in the form of land, water, and chemical use, and again when the food is disposed of, not to mention the impact of the greenhouse gases released as wasted food decays.

To tackle this problem, Santa González and the Wisconsin Food Hub are helping small-scale farmers access refrigeration facilities and distribution chains. As part of her research, she is helping to develop AI tools that can optimize the logistics of the small-scale farmer supply chain while also making local consumers in underserved areas aware of what’s available so food doesn’t end up in landfills.

“This solution has to be accessible,” she says. “Not just in the sense that the food is accessible, but that the tools we are providing to them are accessible. The end users have to understand the tools and be able to use them. It has to be sustainable as a resource.”

Making AI accessible to people in the community is a core goal of the NSF’s AI Institute for Intelligent Cyberinfrastructure with Computational Learning in the Environment (ICICLE), one of the partners involved with the project.

“A large segment of the population we are working with, which includes historically marginalized communities, has a negative reaction to AI. They think of machines taking over, or data being stolen. Our goal is to democratize AI in these decision-support tools as we work toward the UN Sustainable Development Goal of Zero Hunger. There is so much power in these tools to solve complex problems that have very real results. More people will be fed and less food will spoil before it gets to people’s homes.”

Santa González hopes the tools they are building can be packaged and customized for food co-ops everywhere.

AI and Ethics

Like Santa González, Joe Bozeman III is also focused on the ethical and sustainable deployment of AI and machine learning, especially among marginalized communities. The assistant professor in the School of Civil and Environmental Engineering is an industrial ecologist committed to fostering ethical climate change adaptation and mitigation strategies. His SEEEL Lab works to make sure researchers understand the consequences of decisions before they move from academic concepts to policy decisions, particularly those that rely on data sets involving people and communities.

“With the administration of big data, there is a human tendency to assume that more data means everything is being captured, but that's not necessarily true,” he cautions. “More data could mean we're just capturing more of the data that already exists, while new research shows that we’re not including information from marginalized communities that have historically not been brought into the decision-making process. That includes underrepresented minorities, rural populations, people with disabilities, and neurodivergent people who may not interface with data collection tools.”

Bozeman is concerned that overlooking marginalized communities in data sets will result in decisions that at best ignore them and at worst cause them direct harm.

“Our lab doesn't wait for the negative harms to occur before we start talking about them,” explains Bozeman, who holds a courtesy appointment in the School of Public Policy. “Our lab forecasts what those harms will be so decision-makers and engineers can develop technologies that consider these things.”

He focuses on urbanization, the food-energy-water nexus, and the circular economy. He has found that much of the research in those areas is conducted in a vacuum without consideration for human engagement and the impact it could have when implemented.

Bozeman is lobbying for built-in tools and safeguards to mitigate the potential for harm from researchers using AI without appropriate consideration. He already sees a disconnect between the academic world and the public. Bridging that trust gap will require ethical uses of AI.

“We have to start rigorously including their voices in our decision-making to begin gaining trust with the public again. And with that trust, we can all start moving toward sustainable development. If we don't do that, I don't care how good our engineering solutions are, we're going to miss the boat entirely on bringing along the majority of the population.”

BBISS Support

Moving forward, Hester is excited about the impact the Brook Byers Institute for Sustainable Systems can have on AI and sustainability research through a variety of support mechanisms.

“BBISS continues to invest in faculty development and training in community-driven research strategies, including the Community Engagement Faculty Fellows Program (with the Center for Sustainable Communities Research and Education), while empowering multidisciplinary teams to work together to solve grand engineering challenges with AI by supporting the AI+Climate Faculty Interest Group, as well as partnering with and providing administrative support for community-driven research projects.”

News Contact

Brent Verrill, Research Communications Program Manager, BBISS

Aug. 21, 2024

A new agreement between Los Alamos National Laboratory (LANL) and the National Science Foundation’s Artificial Intelligence Institute for Advances in Optimization (AI4OPT) at Georgia Tech is set to propel research in applied artificial intelligence (AI) and engage students and professionals in this rapidly growing field.

“This collaboration will help develop new AI technologies for the next generation of scientific discovery and the design of complex systems and the control of engineered systems,” said Russell Bent, scientist at Los Alamos. “At Los Alamos, we have a lot of interest in optimizing complex systems. We see an opportunity with AI to enhance system resilience and efficiency in the face of climate change, extreme events, and other challenges.”

The agreement establishes a research and educational partnership focused on advancing AI tools for a next-generation power grid. Maintaining and optimizing the energy grid involves extensive computation, and AI-informed approaches, including modeling, could address power-grid issues more effectively.

AI Approaches to Optimization and Problem-Solving

Optimization involves finding solutions that utilize resources effectively and efficiently. This research partnership will leverage Georgia Tech's expertise to develop “trustworthy foundation models” that, by incorporating AI, reduce the vast computing resources needed for solving complex problems.

In energy grid systems, optimization involves quickly sorting through possibilities and resources to deliver immediate solutions during a power-distribution crisis. The research will develop “optimization proxies” that extend current methods by incorporating broader parameters such as generator limits, line ratings, and grid topologies. Training these proxies with AI for energy applications presents a significant research challenge.
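As a rough illustration of what an optimization proxy does, consider economic dispatch on a toy grid. A learned model maps demand directly to generator set points almost instantly, and because its raw output may violate constraints, a cheap repair step restores feasibility. In the Python sketch below, the "learned" model is a stand-in fixed-share rule (an assumption, not a trained network), and the repair step enforces only generator limits and power balance. Line ratings and grid topology, which the LANL and AI4OPT work also considers, are omitted. All names and numbers are hypothetical.

```python
from typing import List, Sequence


def proxy_predict(demand_mw: float, shares: Sequence[float]) -> List[float]:
    """Stand-in for a trained proxy that maps demand straight to set points.

    A real proxy would be a learned model such as a neural network; here each
    generator simply receives a fixed, hypothetical share of demand.
    """
    return [s * demand_mw for s in shares]


def repair(dispatch: Sequence[float], p_min: Sequence[float],
           p_max: Sequence[float], demand_mw: float) -> List[float]:
    """Project a predicted dispatch back onto generator limits and power balance."""
    if not sum(p_min) <= demand_mw <= sum(p_max):
        raise ValueError("demand is outside total generator capability")
    # 1. Clip each generator's output to its [p_min, p_max] limits.
    p = [min(max(x, lo), hi) for x, lo, hi in zip(dispatch, p_min, p_max)]
    # 2. Spread any supply/demand mismatch in proportion to each unit's slack.
    gap = demand_mw - sum(p)
    if gap > 0:
        slack = [hi - x for x, hi in zip(p, p_max)]  # room to ramp up
    elif gap < 0:
        slack = [x - lo for x, lo in zip(p, p_min)]  # room to ramp down
    else:
        return p
    total = sum(slack)  # positive whenever gap != 0, given the capability check
    return [x + gap * s / total for x, s in zip(p, slack)]


p_min, p_max = [10, 20, 0], [50, 80, 40]
raw = proxy_predict(100, [0.5, 0.3, 0.3])  # shares sum to 1.1, so it over-generates
print(repair(raw, p_min, p_max, 100))
```

Because the slack is split proportionally, no unit is pushed past its limit, and the repaired dispatch always sums to demand. For the example above, the 110 MW raw prediction is trimmed to 45, 28.75, and 26.25 MW. The speed advantage comes from replacing a full optimization solve with one model evaluation plus this inexpensive correction.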

The collaboration will also address problems related to LANL’s diverse missions and applications. The team’s research will advance pioneering efforts in graph-based, physics-informed machine learning to solve Laboratory mission problems.

Outreach and Training Opportunities

In January 2025, the Laboratory will host a Grid Science Winter School and Conference, featuring lectures from LANL scientists and academic partners on electrical grid methods and techniques. With Georgia Tech as a co-organizer, AI optimization for the energy grid will be a focal point of the event.

Since 2020, the Laboratory has been working with Georgia Tech on energy grid projects. AI4OPT, which includes several industrial and academic partners, aims to achieve breakthroughs by combining AI and mathematical optimization.

“The use-inspired research in AI4OPT addresses fundamental societal and technological challenges,” said Pascal Van Hentenryck, AI4OPT director. “The energy grid is crucial to our daily lives. Our collaboration with Los Alamos advances a research mission and educational vision with significant impact for science and society.”

The three-year agreement, funded through the Laboratory Directed Research and Development program’s ArtIMis initiative, runs through 2027. It supports the Laboratory’s commitment to advancing AI. Earl Lawrence is the project’s principal investigator, with Diane Oyen and Emily Castleton joining Bent as co-principal investigators.

Bent, Castleton, Lawrence, and Oyen are also members of the AI Council at the Laboratory. The AI Council helps the Lab navigate the evolving AI landscape, build investment capacities, and forge industry and academic partnerships.

As highlighted in the Department of Energy’s Frontiers in Artificial Intelligence for Science, Security, and Technology (FASST) initiative, AI technologies will significantly enhance the contributions of laboratories to national missions. This partnership with Georgia Tech through AI4OPT is a key step towards that future.

News Contact

Breon Martin

Aug. 21, 2024

In a rapidly evolving global landscape, predicting the future of supply chains is akin to trying to catch lightning in a bottle. By examining past trends and disruptions, we can glean invaluable insights into what the future might hold and how to navigate it effectively. This article, drawing from Chris Gaffney's extensive experience in the beverage industry, explores the inherent challenges of forecasting supply chain trends, reflects on past predictions that didn't pan out, and suggests proactive strategies to stay ahead of the curve.

Introduction

Predicting the future of supply chains has always been a challenging endeavor. As someone who has spent more than 25 years in the beverage industry, I’ve witnessed firsthand how even the most well-thought-out predictions can miss the mark. Yet, understanding where we went wrong in the past can equip us with the tools to better anticipate and adapt to future challenges.

In this article, I want to explore the complexities of forecasting in the supply chain realm, reflect on some past predictions that didn’t quite hit the target, and suggest actionable strategies that can help us navigate the uncertainties ahead.

The Challenge of Predicting Supply Chain Trends

The supply chain, particularly in the beverage industry, is a complex web of interdependencies. As we push for innovation—from new ingredients to advanced packaging—our supply chains often struggle to keep pace. Historically, the challenges of maintaining quality, managing costs, and ensuring timely delivery have been compounded by global disruptions, technological advancements, and evolving consumer expectations.

In the 1990s, for example, the advent of RFID technology was hailed as a game changer, promising unparalleled visibility and efficiency. While RFID has undoubtedly transformed many aspects of supply chain management, its adoption has been slower and less impactful than originally anticipated. Similarly, the introduction of Enterprise Resource Planning (ERP) systems was expected to revolutionize the way businesses managed their operations. Yet the promised seamless integration and real-time data accuracy have often fallen short, leading to frustration and costly implementations.

These examples highlight a critical lesson: while technological advancements hold great promise, their real-world application can be fraught with challenges that delay or dilute their impact.

Lessons from Past Predictions

One of the most striking examples of a prediction that didn’t pan out as expected is the Just-in-Time (JIT) manufacturing model. Initially, JIT was celebrated for its potential to minimize waste and reduce inventory costs. However, the COVID-19 pandemic exposed the vulnerabilities of this approach. As supply chains were disrupted worldwide, many companies found themselves unable to meet demand due to the lack of buffer stock. This has led to a reevaluation of the JIT model, with many businesses now looking to build more resilience into their supply chains by maintaining higher levels of inventory.
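A common textbook way to size the buffer stock that JIT minimizes is the safety-stock formula SS = z × σ_d × √L. Here σ_d is the standard deviation of daily demand, L is the replenishment lead time in days, and z is the standard normal quantile for the target service level. This standard formula is offered as an illustration, not as the author's method. It assumes independent, normally distributed daily demand and a fixed lead time, and the numbers below are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def safety_stock(daily_demand_sd: float, lead_time_days: float,
                 service_level: float) -> float:
    """Buffer units needed to hit a target cycle service level (textbook formula)."""
    if not 0 < service_level < 1:
        raise ValueError("service_level must be strictly between 0 and 1")
    if daily_demand_sd < 0 or lead_time_days < 0:
        raise ValueError("demand spread and lead time must be non-negative")
    z = NormalDist().inv_cdf(service_level)  # standard normal quantile for the service level
    return z * daily_demand_sd * sqrt(lead_time_days)


def reorder_point(mean_daily_demand: float, daily_demand_sd: float,
                  lead_time_days: float, service_level: float) -> float:
    """Expected demand over the lead time plus the safety-stock buffer."""
    return mean_daily_demand * lead_time_days + safety_stock(
        daily_demand_sd, lead_time_days, service_level
    )


normal = safety_stock(40, 9, 0.95)      # supplier normally delivers in 9 days
disrupted = safety_stock(40, 36, 0.95)  # lead time stretches to 36 days
print(round(normal), round(disrupted))
```

With a demand standard deviation of 40 units per day and a 95% service level, a 9-day lead time calls for roughly 197 units of safety stock. If a disruption stretches the lead time to 36 days, the buffer roughly doubles to about 395 units. This is one way to quantify why the lean inventories favored by JIT left little margin when lead times ballooned.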

Another lesson comes from the early 2000s, when global sourcing was predicted to be the ultimate cost-saving strategy. While it did lead to significant cost reductions, it also introduced new risks—ranging from quality control issues to geopolitical tensions—that have since prompted companies to reconsider the balance between cost savings and supply chain security.

The Inherent Risks of Relying on Predictions

One of the inherent risks in predicting supply chain trends is that it often leads to an overreliance on certain strategies or technologies. For instance, the push towards automation and robotics, while offering substantial benefits in terms of efficiency and cost savings, has also led to significant challenges. The initial costs, integration difficulties, and the need for upskilling workers have often been underestimated, leading to delays and unfulfilled promises.

Moreover, as we’ve seen with technologies like blockchain and AI, the hype often outpaces the reality. While these technologies have immense potential to transform supply chain management, their implementation has been slower and more complex than initially expected. This lag can create a false sense of security, leading companies to delay the adoption of alternative strategies or to underinvest in more immediately impactful areas.

Strategies for Navigating the Uncertainty

Given the inherent challenges of predicting the future, how can companies better prepare for what lies ahead? Here are a few strategies that can help:

- Embrace Flexibility and Resilience: Instead of betting on a single prediction or technology, companies should build flexibility into their supply chains. This might involve diversifying suppliers, maintaining higher inventory levels, or investing in modular production systems that can be quickly adapted to changing circumstances.

- Invest in Predictive Analytics: While past predictions have often fallen short, advances in AI and machine learning are making it possible to better anticipate supply chain disruptions and demand fluctuations. By investing in predictive analytics, companies can gain more accurate insights into future trends and make more informed decisions.

- Foster Stronger Relationships with Partners: As supply chains become more complex and globalized, the importance of strong relationships with suppliers and partners cannot be overstated. By working closely with partners, companies can ensure better alignment of goals, improved quality control, and more effective collaboration in the face of disruptions.

- Prioritize Sustainability: As consumer expectations shift towards more sustainable products, companies that prioritize sustainability in their supply chains will be better positioned to meet future demand. This might involve investing in sustainable sourcing practices, reducing waste, or adopting circular economy principles.

- Continual Learning and Adaptation: Finally, companies should foster a culture of continual learning and adaptation. By staying informed about the latest trends, technologies, and best practices, businesses can more effectively navigate the uncertainties of the future and seize new opportunities as they arise.
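Predictive analytics can range from deep learning to very simple statistical models. A minimal baseline is simple exponential smoothing, which forecasts next-period demand as a weighted average that favors recent observations: F(t+1) = α × y(t) + (1 − α) × F(t). The Python sketch below assumes the first observation seeds the forecast and uses a hypothetical smoothing factor. It illustrates the general technique and is not a method the author prescribes.

```python
from typing import Sequence


def exponential_smoothing(history: Sequence[float], alpha: float = 0.3) -> float:
    """One-step-ahead demand forecast via simple exponential smoothing."""
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    forecast = float(history[0])  # seed the forecast with the first observation
    for y in history[1:]:
        # Blend the newest observation with the previous forecast.
        forecast = alpha * y + (1 - alpha) * forecast
    return forecast


weekly_cases = [100, 120, 110]
print(exponential_smoothing(weekly_cases, alpha=0.5))  # 110.0
```

A higher α reacts faster to sudden shifts such as a demand spike, at the cost of chasing noise. Comparing such a baseline's errors against a more sophisticated model is a cheap way to check whether a predictive-analytics investment is actually paying off.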

Conclusion

Predicting the future of supply chains is a daunting task, but it’s one that we must continually strive to master. By learning from past mistakes and adopting a proactive, flexible approach, we can better navigate the challenges ahead and turn potential disruptions into opportunities for growth and innovation. As we look to the future, let’s remember that while predictions can guide us, it’s our ability to adapt and respond to the unexpected that will ultimately determine our success.

FAQ

What are the biggest challenges in predicting supply chain trends?

The biggest challenges include the complexity of global supply chains, the rapid pace of technological change, and the unpredictable nature of global disruptions. These factors make it difficult to accurately forecast future trends and adapt to new developments.

How can companies build more resilient supply chains?

Companies can build more resilient supply chains by diversifying their suppliers, maintaining higher inventory levels, investing in flexible production systems, and fostering strong relationships with partners. Additionally, leveraging predictive analytics can help companies anticipate disruptions and respond more effectively.
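Higher inventory levels are usually sized rather than guessed. A common textbook approximation for buffer (safety) stock is SS = z × σ_d × √L. Here z is the standard-normal quantile for the target service level, σ_d is the standard deviation of demand per period, and L is the lead time in periods. The sketch below assumes independent, normally distributed demand and a fixed lead time. It is an illustration, not a method the FAQ prescribes, and the function name is hypothetical.

```python
import math
from statistics import NormalDist


def safety_stock(service_level: float, demand_std_per_period: float, lead_time_periods: float) -> float:
    """Textbook safety-stock approximation: SS = z * sigma_d * sqrt(L).

    Assumes per-period demand is independent and normally distributed and the
    lead time L is fixed; service_level must be strictly between 0 and 1.
    """
    # z-score for the desired probability of not stocking out during the lead time
    z = NormalDist().inv_cdf(service_level)
    # Demand variability over the lead time grows with the square root of L
    return z * demand_std_per_period * math.sqrt(lead_time_periods)
```

With a 95% service level, a demand standard deviation of 20 units per week, and a 4-week lead time, `safety_stock(0.95, 20, 4)` gives roughly 66 units.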

What role does technology play in modern supply chains?

Technology plays a critical role in modern supply chains, offering tools for real-time tracking, predictive analytics, and automation. However, the implementation of new technologies often comes with challenges, such as high costs and integration difficulties, which must be carefully managed.

Why is sustainability important in supply chain management?

Sustainability is increasingly important as consumers demand more environmentally friendly products. Companies that prioritize sustainability in their supply chains can reduce waste, improve efficiency, and better meet the expectations of consumers and regulators.

How can companies stay ahead of future supply chain challenges?

To stay ahead, companies should embrace flexibility, invest in new technologies, foster strong partnerships, prioritize sustainability, and continually adapt to new developments. Staying informed about industry trends and best practices is also crucial.

What lessons can be learned from past supply chain disruptions?

Past disruptions, such as the COVID-19 pandemic, have highlighted the importance of resilience, flexibility, and strong partnerships. Companies that learn from these events and adapt their strategies accordingly will be better positioned to navigate future challenges.

Chris Gaffney, SCL Managing Director

Aug. 20, 2024

For three days, a cybercriminal unleashed a crippling ransomware attack on the futuristic city of Northbridge. The attack shut down the city’s infrastructure and severely impacted public services, until Georgia Tech cybersecurity experts stepped in to stop it.

This scenario played out this weekend at the DARPA AI Cyber Challenge (AIxCC) semi-final competition held at DEF CON 32 in Las Vegas. Team Atlanta, which included the Georgia Tech experts, was among the contest’s winners.

Team Atlanta will now compete against six other teams in the final round, which takes place at DEF CON 33 in August 2025. The finalists will keep their AI systems and improve them over the next 12 months, using the $2 million prize each team received in the semi-final.

The AI systems in the finals must be open sourced and ready for immediate, real-world launch. The AIxCC final competition will award a $4 million grand prize to the ultimate champion.

Team Atlanta is made up of past and present Georgia Tech students and was put together with the help of Professor Taesoo Kim. Not only did the team secure a spot in the final competition, but it also found a zero-day vulnerability in the contest.

“I am incredibly proud to announce that Team Atlanta has qualified for the finals in the DARPA AIxCC competition,” said Taesoo Kim, professor in the School of Cybersecurity and Privacy and a vice president of Samsung Research.

“This achievement is the result of exceptional collaboration across various organizations, including the Georgia Tech Research Institute (GTRI), industry partners like Samsung, and international academic institutions such as KAIST and POSTECH.”

After noticing discrepancies in the competition scoreboard, the team discovered and reported a bug in the competition itself. The type of vulnerability they discovered is known as a zero-day vulnerability, because it was unknown to the software’s developers until it was found, giving them zero days of advance notice to fix it.

While this didn’t earn Team Atlanta additional points, the competition organizer acknowledged the team and their finding during the closing ceremony.

“Our team, deeply rooted in Atlanta and largely composed of Georgia Tech alumni, embodies the innovative spirit and community values that define our city,” said Kim.

“With over 30 dedicated students and researchers, we have demonstrated the power of cross-disciplinary teamwork in the semi-final event. As we advance to the finals, we are committed to pushing the boundaries of cybersecurity and artificial intelligence, and I firmly believe the resulting systems from this competition will transform the security landscape in the coming year!”

The team tested their cyber reasoning system (CRS), dubbed Atlantis, on software used for data management, website support, healthcare systems, supply chains, electrical grids, transportation, and other critical infrastructures.

Atlantis is a next-generation system for finding and fixing bugs, and it can hunt for them in multiple programming languages. The system immediately issues accurate software patches without any human intervention.
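The article does not describe how Atlantis works internally. As a generic, much-simplified illustration of automated bug hunting, the sketch below is a random fuzzer. It feeds random strings to a target function and reports the first input that crashes it. `toy_parser`, its planted bug, and `fuzz` are all hypothetical, and the patch-generation half of a cyber reasoning system is not shown.

```python
import random
import string
from typing import Callable, Optional


def toy_parser(data: str) -> int:
    """Hypothetical target with a planted bug: crashes on inputs starting with '!'."""
    if data.startswith("!"):
        # Planted defect: indexes past the end of a one-element list
        return [0][len(data)]
    return len(data)


def fuzz(target: Callable[[str], object], trials: int, seed: int = 0) -> Optional[str]:
    """Feed random printable strings to `target`; return the first crashing input, if any."""
    rng = random.Random(seed)  # fixed seed keeps runs reproducible
    for _ in range(trials):
        length = rng.randint(1, 8)
        candidate = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            target(candidate)
        except Exception:
            return candidate  # this input triggered an unexpected exception
    return None
```

Real systems typically rely on coverage-guided fuzzing and program analysis rather than blind random inputs, which rarely reach deeply hidden bugs.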

AIxCC is a Pentagon-backed initiative that was announced in August 2023 and will award up to $20 million in prize money throughout the competition. Team Atlanta was among the 42 teams that qualified for the semi-final competition earlier this year.

News Contact

John Popham

Communications Officer II at the School of Cybersecurity and Privacy

Aug. 19, 2024

Nylon, Teflon, Kevlar. These are just a few familiar polymers — large-molecule chemical compounds — that have changed the world. From Teflon-coated frying pans to 3D printing, polymers are vital to creating the systems that make the world function better.

Finding the next groundbreaking polymer is always a challenge, but now Georgia Tech researchers are using artificial intelligence (AI) to shape and transform the future of the field. Rampi Ramprasad’s group develops and adapts AI algorithms to accelerate materials discovery.

This summer, two papers published in the Nature family of journals highlight the significant advancements and success stories emerging from years of AI-driven polymer informatics research. The first, featured in Nature Reviews Materials, showcases recent breakthroughs in polymer design across critical and contemporary application domains: energy storage, filtration technologies, and recyclable plastics. The second, published in Nature Communications, focuses on the use of AI algorithms to discover a subclass of polymers for electrostatic energy storage, with the designed materials undergoing successful laboratory synthesis and testing.

“In the early days of AI in materials science, propelled by the White House’s Materials Genome Initiative over a decade ago, research in this field was largely curiosity-driven,” said Ramprasad, a professor in the School of Materials Science and Engineering. “Only in recent years have we begun to see tangible, real-world success stories in AI-driven accelerated polymer discovery. These successes are now inspiring significant transformations in the industrial materials R&D landscape. That’s what makes this review so significant and timely.”

AI Opportunities

Ramprasad’s team has developed groundbreaking algorithms that can instantly predict polymer properties and formulations before they are physically created. The process begins by defining application-specific target property or performance criteria. Machine learning (ML) models train on existing material-property data to predict these desired outcomes. Additionally, the team can generate new polymers, whose properties are forecasted with ML models. The top candidates that meet the target property criteria are then selected for real-world validation through laboratory synthesis and testing. The results from these new experiments are integrated with the original data, further refining the predictive models in a continuous, iterative process.
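The iterative workflow described above can be sketched as a toy active-learning loop. Everything here is a hypothetical stand-in: a single numeric descriptor replaces a real polymer representation, a least-squares line replaces the group’s ML models, and `lab_measure` stands in for laboratory synthesis and testing. It illustrates only the train, generate, predict, screen, validate, and retrain cycle, not Ramprasad’s actual algorithms.

```python
import random
from typing import Callable, List, Tuple

# (hypothetical numeric descriptor, measured property value)
Sample = Tuple[float, float]


def fit_line(data: List[Sample]) -> Tuple[float, float]:
    """Least-squares fit of property = slope * descriptor + intercept.

    Requires at least two samples with distinct descriptors.
    """
    n = len(data)
    mean_x = sum(x for x, _ in data) / n
    mean_y = sum(y for _, y in data) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in data)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in data)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def design_loop(
    data: List[Sample],
    target: float,
    lab_measure: Callable[[float], float],
    rounds: int = 3,
    per_round: int = 20,
    top_k: int = 2,
    seed: int = 0,
) -> List[Sample]:
    """Toy train -> generate -> predict -> screen -> validate -> retrain loop."""
    rng = random.Random(seed)
    data = list(data)  # copy so the caller's data set is not mutated
    for _ in range(rounds):
        # 1. Train the surrogate model on all data gathered so far
        slope, intercept = fit_line(data)
        # 2. Generate new hypothetical candidates (random descriptors here)
        candidates = [rng.uniform(0.0, 10.0) for _ in range(per_round)]
        # 3. Predict each candidate's property with the current model
        scored = [(slope * x + intercept, x) for x in candidates]
        # 4. Keep the top-k candidates whose prediction meets the target
        hits = sorted((s for s in scored if s[0] >= target), reverse=True)[:top_k]
        # 5. "Validate" in the lab and fold the measurements back into the data
        for _, x in hits:
            data.append((x, lab_measure(x)))
    return data
```

Calling `design_loop([(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)], target=15.0, lab_measure=lambda x: 2 * x + 1)` grows the data set only with candidates predicted to clear the target. Each pass then retrains on the enlarged data, mirroring how new experimental results refine the models.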

While AI can accelerate the discovery of new polymers, it also presents unique challenges. The accuracy of AI predictions depends on the availability of rich, diverse, extensive initial data sets, making quality data paramount. Additionally, designing algorithms capable of generating chemically realistic and synthesizable polymers is a complex task.

The real challenge begins after the algorithms make their predictions: proving that the designed materials can be made in the lab and function as expected, and then demonstrating that they can be scaled up beyond the lab for real-world use. Ramprasad’s group designs these materials, while their fabrication, processing, and testing are carried out by collaborators at various institutions, including Georgia Tech. Professor Ryan Lively from the School of Chemical and Biomolecular Engineering frequently collaborates with Ramprasad’s group and is a co-author of the paper published in Nature Reviews Materials.

"In our day-to-day research, we extensively use the machine learning models Rampi’s team has developed,” Lively said. “These tools accelerate our work and allow us to rapidly explore new ideas. This embodies the promise of ML and AI because we can make model-guided decisions before we commit time and resources to explore the concepts in the laboratory."

Using AI, Ramprasad’s team and their collaborators have made significant advancements in diverse fields, including energy storage, filtration technologies, additive manufacturing, and recyclable materials.

Polymer Progress

One notable success, described in the Nature Communications paper, involves the design of new polymers for capacitors, which store electrostatic energy. These devices are vital components in electric and hybrid vehicles, among other applications. Ramprasad’s group worked with researchers from the University of Connecticut.

Current capacitor polymers offer either high energy density or thermal stability, but not both. By leveraging AI tools, the researchers determined that insulating materials made from polynorbornene and polyimide polymers can simultaneously achieve high energy density and high thermal stability. The polymers can be further enhanced to function in demanding environments, such as aerospace applications, while maintaining environmental sustainability.
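To put “energy density” in concrete terms: for an idealized linear dielectric, the maximum energy stored per unit volume is U = ½ ε0 εr E_b², where ε0 is the vacuum permittivity, εr the material’s relative permittivity, and E_b its breakdown field strength. The short sketch below evaluates that textbook relation. The input values are illustrative assumptions, not figures from the Nature Communications paper.

```python
VACUUM_PERMITTIVITY = 8.8541878128e-12  # epsilon_0 in F/m (CODATA 2018)


def energy_density_j_per_cm3(relative_permittivity: float, breakdown_field_mv_per_m: float) -> float:
    """Maximum energy density of a linear dielectric: U = 0.5 * eps0 * eps_r * E_b**2."""
    field_v_per_m = breakdown_field_mv_per_m * 1e6  # MV/m -> V/m
    joules_per_m3 = 0.5 * VACUUM_PERMITTIVITY * relative_permittivity * field_v_per_m ** 2
    return joules_per_m3 / 1e6  # 1 J/cm^3 = 1e6 J/m^3
```

For εr = 3 and E_b = 500 MV/m, `energy_density_j_per_cm3(3.0, 500.0)` comes to about 3.32 J/cm³. Because U scales with the square of E_b, any loss of breakdown strength at high temperature cuts the stored energy sharply.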

“The new class of polymers with high energy density and high thermal stability is one of the most concrete examples of how AI can guide materials discovery,” said Ramprasad. “It is also the result of years of multidisciplinary collaborative work with Greg Sotzing and Yang Cao at the University of Connecticut and sustained sponsorship by the Office of Naval Research.”

Industry Potential

The potential for real-world translation of AI-assisted materials development is underscored by industry participation in the Nature Reviews Materials article. Co-authors of this paper also include scientists from Toyota Research Institute and General Electric. To further accelerate the adoption of AI-driven materials development in industry, Ramprasad co-founded Matmerize Inc., a software startup company recently spun out of Georgia Tech. Their cloud-based polymer informatics software is already being used by companies across various sectors, including energy, electronics, consumer products, chemical processing, and sustainable materials.

“Matmerize has transformed our research into a robust, versatile, and industry-ready solution, enabling users to design materials virtually with enhanced efficiency and reduced cost,” Ramprasad said. “What began as a curiosity has gained significant momentum, and we are entering an exciting new era of materials by design.”

News Contact

Tess Malone, Senior Research Writer/Editor

tess.malone@gatech.edu

Aug. 12, 2024

Interdisciplinary collaboration drives innovation at Georgia Tech. Researchers with joint appointments across the Institute’s six colleges discuss how blending diverse fields helps them create more sustainable, technologically advanced, and socially viable solutions to some of our planet’s biggest problems.