Feb. 21, 2025

As CREATE-X enters 2025, the program is more committed than ever to helping students build the future they envision. Through workshops, courses, mentorship, and support, CREATE-X provides a low-risk environment where students can tackle real-world problems and develop solutions.

So far, we have helped launch more than 650 startups by founders from over 38 majors, seen eight founders featured in the Forbes 30 Under 30 Atlanta listing this year, and generated a total portfolio valuation of over $2.4 billion.

Startup Launch, our 12-week summer startup accelerator, continues to be a cornerstone of student entrepreneurship at Georgia Tech, and the program is expanding to accommodate additional teams. Students are encouraged to apply and can even use course projects for their startups. Teams can be at any stage of development and must include at least one Georgia Tech faculty member, alumnus, or current student. Solopreneurs are also accepted. Apply to this summer’s Startup Launch by Monday, March 17.

Benefits of Startup Launch

- Education and Mentorship: Learn from experienced entrepreneurs and business experts.

- Financial Support: Receive $5,000 in optional seed funding and $150,000 of in-kind services like legal counsel and accounting.

- Networking Opportunities: Access a rich entrepreneurial network that will last a lifetime.

- Time in Front of Investors: Participate in Demo Day, Tech’s premier startup showcase, which attracts over 1,500 attendees annually, including investors, business and government leaders, field experts, and potential customers.

- Hands-On Experience: Fully immerse yourself in the startup process and intern for yourself.

- Skill Development: Build skills and confidence that will benefit you in any career path.

Rahul Saxena, director of CREATE-X, encouraged students not to feel like they have to be 100% ready before applying.

“We have seen classroom projects and hackathon teams move on to build very successful startups, even though they weren't originally thinking about it,” he said. “Students should apply for Launch to explore the possibility of it with whatever project they are doing now. You never know what could come of it.”

CREATE-X uses rolling admissions, so apply to Startup Launch today to increase your chances of acceptance. CREATE-X believes in producing quickly, failing fast, and iterating again. The team offers feedback to all applicants and encourages them to submit, even if they’re not entirely sure about their application. A previous info session on Startup Launch and a Startup Launch sample application are available to help students prepare. Attend CREATE-X events to get insights into entrepreneurship, workshop business ideas, find teammates, and prepare your Startup Launch applications. For additional questions, email create-x@groups.gatech.edu.

News Contact

Breanna Durham

Marketing Strategist

Feb. 20, 2025

Following a nationwide search, Georgia Tech President Ángel Cabrera has named Timothy Lieuwen the Executive Vice President for Research (EVPR). Lieuwen has served as interim EVPR since September 10, 2024.

“Tim’s ability to bridge academia, industry, and government has been instrumental in driving innovation and positioning Georgia Tech as a critical partner in tackling complex global challenges,” said Cabrera. “With his leadership, I am confident Georgia Tech will continue to expand its impact, strengthen its strategic collaborations, and further solidify its reputation as a world leader in research and innovation.”

A proud Georgia Tech alumnus (M.S. ME 1997, Ph.D. ME 1999), Lieuwen has spent more than 25 years at the Institute. He is a Regents’ Professor and holds the David S. Lewis, Jr. Chair in the Daniel Guggenheim School of Aerospace Engineering. Prior to the interim EVPR role, Lieuwen served as executive director of the Strategic Energy Institute for 12 years. His expertise spans energy, propulsion, energy policy, and national security, and he has worked closely with industry and government to develop new knowledge and see its implementation in the field.

Lieuwen has been widely recognized for his contributions to research and innovation. He is a member of the National Academy of Engineering, as well as a fellow of multiple other professional organizations. Recently, he was elected an International Fellow of the U.K.’s Royal Academy of Engineering, one of only three U.S. engineers in 2024 to receive this prestigious commendation. The honor acknowledges Lieuwen’s contributions to engineering and his efforts to advance research, education initiatives, and industry collaborations.

He has authored or edited four books, published over 400 scientific articles, and holds nine patents — several of which are licensed to industry. He also founded TurbineLogic, an analytics firm working in the energy industry. Additionally, Lieuwen serves on governing and advisory boards for three Department of Energy national labs and was appointed by the U.S. Secretary of Energy to the National Petroleum Council.

The EVPR is the Institute’s chief research officer and directs Georgia Tech’s $1.37 billion portfolio of research, development, and sponsored activities. This includes leadership of the Georgia Tech Research Institute, the Enterprise Innovation Institute, nine Interdisciplinary Research Institutes and numerous associated research centers, and related research administrative support units: commercialization, corporate engagement, research development and operations, and research administration.

“I am honored to step into this role at a time when research and innovation have never been more critical,” Lieuwen said. “Georgia Tech’s research enterprise is built on collaboration — across disciplines, across industries, and across communities. Our strength lies not just in the breakthroughs we achieve, but in how we translate them into real-world impact.

“My priority is to put people first — empowering our researchers, students, and partners to push boundaries, scale our efforts, and deepen our engagement across Georgia and beyond. Together, we will expand our reach, accelerate discovery, and ensure that Georgia Tech remains a driving force for progress and service.”

News Contact

Shelley Wunder-Smith | Director of Research Communications

shelley.wunder-smith@research.gatech.edu

Feb. 20, 2025

In today's data-driven world, supply chain professionals and business leaders are increasingly required to leverage analytics to drive decision-making. As companies invest in building data capabilities, one critical question emerges: Which programming language is best for supply chain analytics—Python or R?

Both Python and R have strong footholds in the analytics space, each with unique advantages. However, industry trends suggest a growing shift toward Python as the dominant tool for data science, machine learning, and enterprise applications. While R remains valuable in specific statistical and academic contexts, businesses must carefully assess which language aligns best with their analytics goals and workforce development strategies.

This article explores the strengths of each language and provides guidance for industry professionals looking to make informed decisions about which to prioritize for their teams.

Why Python Is Gaining Industry-Wide Adoption

1. Versatility and Scalability for Business Applications

Python has evolved into a comprehensive tool that extends beyond traditional analytics into automation, optimization, artificial intelligence, and supply chain modeling. Its key advantages include:

- Scalability: Python handles large-scale data processing and integrates seamlessly with cloud computing environments.

- Machine Learning and AI: Python’s ecosystem includes powerful machine learning libraries like scikit-learn, TensorFlow, and PyTorch.

- Integration Capabilities: Python works well with databases, APIs, and ERP systems, embedding analytics into operational workflows.
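As a small illustration of the integration point (not taken from the article), the sketch below uses Python’s built-in `sqlite3` module against an in-memory database. The `orders` table, its columns, the SKU codes, and the `average_weekly_demand` helper are hypothetical stand-ins for an ERP or warehouse data source; a real deployment would use its own database driver and schema.

```python
import sqlite3
from statistics import mean

# Hypothetical order table standing in for an ERP or warehouse database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (sku TEXT, week INTEGER, units INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("A100", 1, 120), ("A100", 2, 135), ("A100", 3, 128),
     ("B200", 1, 40), ("B200", 2, 55), ("B200", 3, 47)],
)

def average_weekly_demand(connection, sku):
    """Pull one SKU's weekly history with SQL, then summarize it in Python."""
    rows = connection.execute(
        "SELECT units FROM orders WHERE sku = ? ORDER BY week", (sku,)
    ).fetchall()
    return mean(units for (units,) in rows)

for sku in ("A100", "B200"):
    print(sku, round(average_weekly_demand(conn, sku), 1))
```

The point of the design is that the query and the analysis live in one script, so the same code can be scheduled to feed reports or downstream systems.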

2. Workforce Readiness and Talent Development

From a talent perspective, Python is becoming the preferred programming language for data science and analytics roles. Surveys indicate that Python is used in 67% to 90% of analytics-related jobs, making it a crucial skill for professionals. Employers benefit from:

- A larger talent pool of Python-proficient professionals.

- A lower barrier to entry for new employees learning data analytics.

- The ability to streamline analytics processes across different functions.

3. Industry Adoption in Supply Chain Analytics

Python is widely adopted in logistics, manufacturing, and supply chain optimization due to its ability to handle:

- Demand forecasting and inventory optimization.

- Network modeling and simulation.

- Automation of data pipelines and reporting.

- Predictive maintenance and anomaly detection.
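To make the forecasting and inventory bullets concrete, here is a minimal sketch of two textbook techniques: simple exponential smoothing for a one-step-ahead demand forecast, and a reorder point with safety stock. The smoothing constant `alpha=0.3`, the 14-day lead time, the daily demand standard deviation of 4 units, and `z=1.65` (roughly a 95% cycle service level if demand is approximately normal) are illustrative assumptions, not values from the article.

```python
import math

def exponential_smoothing(history, alpha=0.3):
    """Simple exponential smoothing; returns the next-period forecast."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for demand in history[1:]:
        # Blend the newest observation with the previous smoothed level.
        level = alpha * demand + (1 - alpha) * level
    return level

def reorder_point(daily_demand, lead_time_days, demand_std, z=1.65):
    """Expected lead-time demand plus safety stock.

    Assumes independent daily demand with constant standard deviation, so
    variability over the lead time scales with sqrt(lead_time_days).
    """
    safety_stock = z * demand_std * math.sqrt(lead_time_days)
    return daily_demand * lead_time_days + safety_stock

weekly_units = [120, 135, 128, 140, 132]  # hypothetical weekly sales
forecast = exponential_smoothing(weekly_units, alpha=0.3)
print(f"next-week forecast: {forecast:.1f} units")
print(f"reorder point: {reorder_point(forecast / 7, 14, 4.0):.1f} units")
```

Libraries such as statsmodels offer more complete forecasting models; the hand-written version simply shows the logic.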

Why R Still Has a Place in Analytics

Despite Python’s widespread adoption, R remains a valuable tool in certain business contexts, particularly in statistical modeling and research applications. R’s strengths include:

- Advanced Statistical Analysis: R was designed for statisticians and remains a leader in econometrics and experimental design.

- Robust Visualization Capabilities: Packages like ggplot2 and Shiny make R a preferred choice for creating high-quality visualizations.

- Adoption in Public Sector and Academic Research: Many government agencies and research institutions continue to rely on R.

Strategic Considerations: Choosing Between Python and R

1. Business Needs and Analytics Maturity

- For companies focused on predictive analytics, automation, and AI, Python is the best choice.

- For organizations conducting deep statistical research or working with legacy R code, maintaining some R capabilities may be necessary.

2. Workforce Training and Skill Development

- Companies investing in analytics training should prioritize Python to align with industry trends.

- If statistical expertise is a core requirement, R may still play a supporting role in niche applications.

3. Tool and System Integration

- Python integrates more seamlessly with enterprise software, making it easier to operationalize analytics.

- R is often more specialized and may require additional effort to connect with business intelligence platforms.

4. Future Trends and Technology Evolution

- Python’s rapid growth suggests it will continue to dominate in analytics and AI.

- While R remains relevant, its role is becoming more specialized.

Final Thoughts: A Pragmatic Approach to Analytics Development

For most organizations, Python represents the future of analytics, offering the broadest capabilities, strongest industry adoption, and easiest integration into enterprise systems. However, R remains useful in specialized statistical applications and legacy environments.

A balanced approach might involve training teams in Python as the primary analytics language while maintaining an awareness of R for niche use cases. The key takeaway for business leaders is not just about choosing a programming language but ensuring their teams develop strong analytical problem-solving skills that transcend specific tools.

By strategically aligning analytics capabilities with business goals, organizations can build a more data-driven, adaptable, and future-ready workforce.

Feb. 19, 2025

Years ago, I wrote a short and very simplistic post that can help explain why a country (or for that matter, any group of people) can run a trade deficit with another country (or again, any other group of people) and still grow their welfare (economy, wealth, etc.) faster than the other country. You can find it here. The post makes a number of basic points using a simple example. I’ll also repeat here that, these years later, I’m still not an economist and I’m not otherwise an expert on certain aspects of international trade. However, I am someone who thinks quite a bit about supply chains and thus, given the configuration of the modern global economy, I do think about international trade and transportation and the potential impact of various import tariffs on supply chains.

First, here is an update on the scale of international trade and its role within the US economy. I’ll use official trade statistics provided by the US Census Bureau. If we look at the trade of physical goods, which is the first thing most people think about when it comes to trade, the US imported US$3.112 trillion worth of goods in FY2023. That is simply a lot of stuff. Note that imported goods can be finished products that are distributed (eventually) through various retail channels to end consumers. But they can also be inputs to production: supplies, components, or work-in-progress inventory that feeds US manufacturing enterprises. A very good example along these lines is Canadian heavy crude oil, shipped to US petroleum refineries as the key input to the production of refined products like gasoline, jet fuel, and other petrochemicals. You can read elsewhere why the US currently imports heavy crude from Canada when it (already) produces more crude oil than it consumes each year and is thus (already) a net exporter.

Most US consumers understand that large parts of our economy rely on imported goods. Fewer might think about the sheer scale of the US goods export economy. Looking again at FY2023, the US exported US$2.051 trillion worth of goods (including some of that aforementioned US-drilled crude oil). Wow, again, that is a lot of stuff. But it is true that the balance of trade here currently favors imports over exports. Since the value of the goods we import exceeds the value of those we export, we ran a goods trade deficit with the rest of the world of US$1.061 trillion in FY2023.

A large part of the US economy today is the provision of services and not goods. There are all sorts of services: food service, financial services, educational services, transportation services, consulting services, and so on. And the US does trade in services as well, both importing services from foreign providers while exporting services to foreign customers. In fact, the US ran a trade surplus in services of US$288 billion which reduced the overall net trade deficit to US$773 billion in FY2023.
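The arithmetic in the last two paragraphs can be checked in a few lines. The figures are the FY2023 numbers quoted in this post, in billions of US dollars; the variable names are only for illustration.

```python
# FY2023 figures quoted in this post, in billions of US dollars.
goods_imports = 3112
goods_exports = 2051
services_surplus = 288

goods_deficit = goods_imports - goods_exports   # 1,061
net_deficit = goods_deficit - services_surplus  # 773

print(f"Goods trade deficit: US${goods_deficit:,} billion")
print(f"Net trade deficit: US${net_deficit:,} billion")
```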

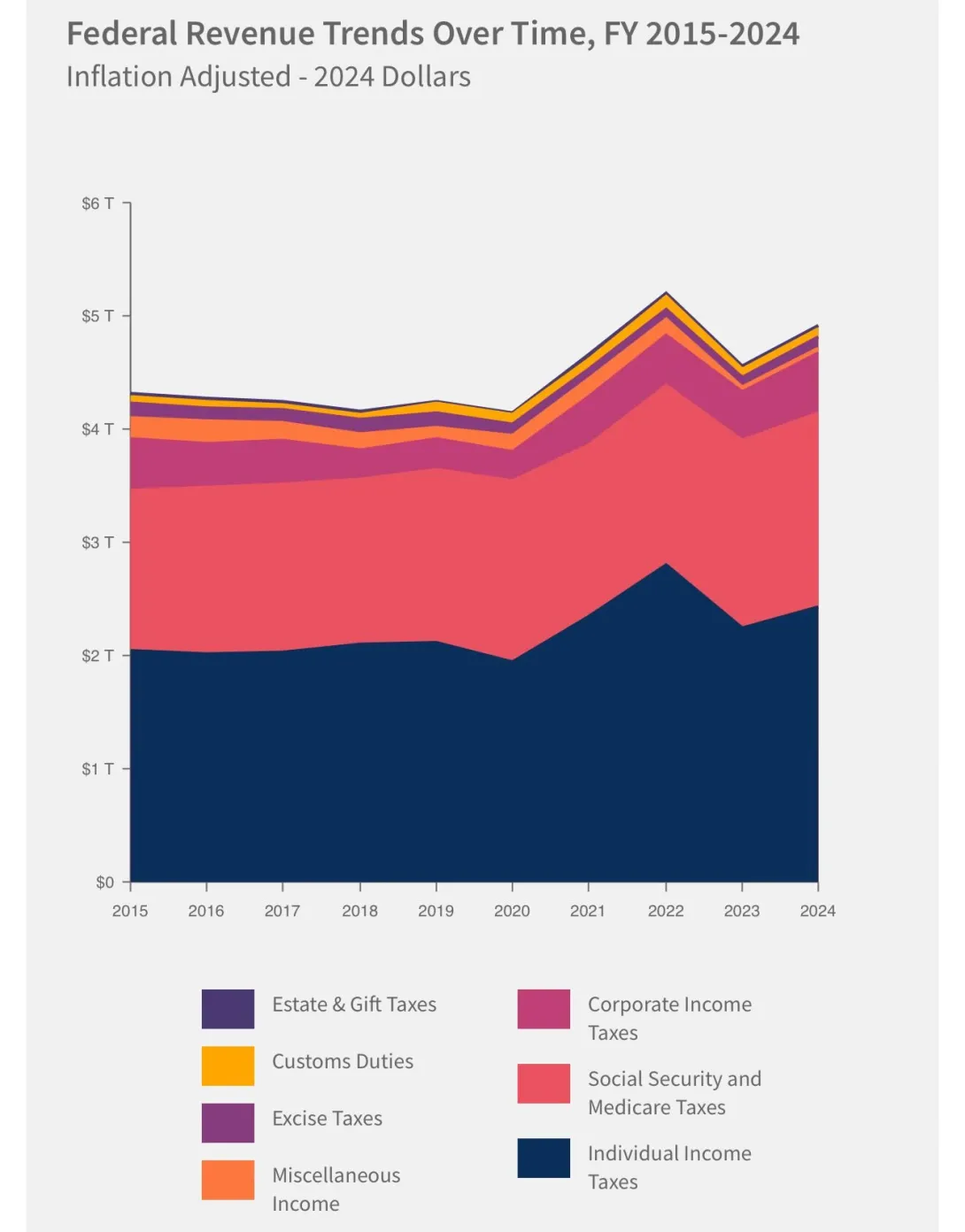

Now let’s discuss tariffs for a bit, and let’s consider duties on imported goods. If the US places a 10% tariff on a bundle of goods (perhaps a specific category of goods from a specific set of countries), then importers of those goods must pay a customs duty on the declared goods before they can be moved into the US (so-called customs clearing). As many have noted already, these importers of record are firms doing business in the US (or individuals) that have arranged for the importation. Examples of such importers include retailers like Walmart and producers like Ford and ExxonMobil. Customs duties collected go into the US Treasury, similar to personal income taxes, Social Security and Medicare taxes, and corporate income taxes. However, the fraction of US government revenue raised by tariffs has been very small for a long period of time. In FY2023, total customs duties collected by the US Treasury were about US$80 billion. In fact, FY2023 trade was down a bit from FY2022, when total goods imports were US$3.35 trillion and total collected duties were US$112 billion, an average duty of about 3.3%.

So, how much revenue could be raised by new tariffs? Let’s imagine a strange world where new US import duties did not distort the economy in any way: the same value of goods is assumed to be imported, even though demand for those goods would likely adjust and the purchasing power of each US$ might increase. If the average duty were increased to 10%, the total revenue raised for the US Treasury in FY2023 would have been US$311 billion. How about a 25% average tariff? Well, of course, US$778 billion. For comparison, the US Treasury received US$2.43 trillion in personal and US$530 billion in corporate income taxes in FY2023; that combined US$2.96 trillion is nearly equivalent to a universal 100% tariff on the value of all imported goods (US$3.112 trillion). The tiny yellow sliver in the figure below shows how little total customs duty revenue has been collected over time and how little it has changed compared to other revenue sources.
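The same back-of-the-envelope reasoning can be written down directly. This sketch keeps the post’s deliberately unrealistic no-distortion assumption (import value unchanged by the tariff) and also computes the implied average duty rates from the collected-duty figures; `static_tariff_revenue` is a hypothetical helper name, and all values are in billions of US dollars.

```python
def static_tariff_revenue(import_value, average_rate):
    """Duty revenue if import value did not respond to the tariff at all.

    This mirrors the no-distortion thought experiment; in reality demand,
    prices, and sourcing would all adjust.
    """
    return import_value * average_rate

fy2023_imports = 3112  # goods imports, US$ billions

# Implied average duty rates from the collected-duty figures.
print(f"FY2022 average duty: {112 / 3350:.1%}")
print(f"FY2023 average duty: {80 / fy2023_imports:.1%}")

for rate in (0.10, 0.25, 1.00):
    revenue = static_tariff_revenue(fy2023_imports, rate)
    print(f"{rate:.0%} average tariff: about US${revenue:,.0f} billion")
```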

Like any other tax, a tariff can be useful to governments as they seek to design mechanisms to fund (important) government activities while distorting economic activity to favor or disfavor various groups of people, businesses, investors, industries, nations, regions, etc. It’s also safe to say that, like any other tax, it can be difficult to determine how economic activity will be distorted by any specific tariff. In fact, it may be more difficult with tariffs for a few reasons. The first is that, unlike a sales tax, a tariff on imported goods is levied upstream of the point of sale. Rather than being added to a consumer’s bill, tariffs increase supply chain costs for importers, and the impact of tariffs on consumers depends on what happens as a result of these cost increases.

First, it should be noted that some supply chain cost increases cannot be borne at all and can lead to the elimination of some products from the marketplace. Why? A cost increase can lead a producer to decide that a product can no longer be profitably produced and marketed, and this is true even if a replacement supply source with a lower (tariff-inclusive) cost of supply can be identified. A retailer may make a similar decision for an imported product. If producers or retailers keep a product in the market, they could decide to lower its quality in some way or to pass on portions of the cost increase directly to their customers. But the supply chain cost persists; perhaps a different supplier not subject to the tariff could be identified, but if that supplier were already providing the same input at the same quality for a lower price, it would already be in use. Since profitability is likely to be affected, the importer’s owners, investors, and employees are also likely to be affected. These interactions are all naturally somewhat complex, and the outcome is difficult to predict.

I’ll finish with a thought. If a government wishes to use new tariffs to yield a political outcome beyond simply raising revenue, those tariffs will likely need to be designed to produce a significant (and noticeable) distortion to some portion of the economy. If the distortion is mild, no change in behavior seems likely. It seems as if the US is about to attempt some new experimentation with tariffs, both to influence the behavior of trade partner nations and to create a significant government revenue source. We will likely get to see firsthand what kind of economic distortion they induce.

News Contact

Feb. 18, 2025

Georgia Tech strives to cultivate thought leaders, advance knowledge, and solve societal challenges by embracing various aspects of the research ecosystem. Through the HBCU/MSI Research Initiative, Georgia Tech seeks to capture data surrounding its research impact in the Georgia Tech-HBCU Research Collaboration Data Dashboard. The dashboard allows users to see information regarding joint funding, publications, hubs, and awards won by the HBCU CHIPS Network, which is co-led by Georgia Tech.

“The data dashboard will represent a key resource for both Georgia Tech and HBCU researchers seeking to enhance research collaboration while substantiating Georgia Tech’s commitment as a valued partner,” said George White, senior director for strategic partnerships.

The Georgia Tech-HBCU Research Collaboration Data Dashboard will serve as a point of reference for faculty and staff in the various departments and colleges to identify opportunities of mutual benefit for collaboration and partnership.

To view the dashboard, visit https://hbcumsi.research.gatech.edu/data-dashboard

News Contact

Taiesha Smith

Sr. Program Manager, HBCU-MSI Research Partnerships

Feb. 18, 2025

When Air Force veteran Michael Trigger began looking for a new career in 2022, he became fascinated by artificial intelligence (AI). Trigger, who left the military in 1989 and then worked in telecommunications, corrections, and professional trucking, learned about an AI-enhanced robotics manufacturing program at the VECTR Center. This training facility in Warner Robins, Georgia, helps veterans transition into new careers. In 2024, he enrolled and learned how to program and operate robots.

As part of the class, Trigger made several trips to the Georgia Tech Manufacturing Institute (GTMI). When the faculty asked if anyone wanted an internship, Trigger raised his hand.

“Coming to Georgia Tech allowed me to clarify what I wanted to do,” he said. “I’ve always been in service-based jobs, but I was interested in additive manufacturing,” or 3D printing.

Every weekday for five months, Trigger drove from his home in Macon to Georgia Tech’s campus for his internship. The paid internship took place at Tech’s Advanced Manufacturing Pilot Facility (AMPF). This 20,000-square-foot, reconfigurable facility serves as the research and development arm of GTMI, functioning as a teaching laboratory, technology test bed, and workforce development space for manufacturing innovations.

During his time there, Trigger focused on computer-aided manufacturing and met with faculty and students to learn about their research. The internship wasn’t convenient, but it was worth it.

“From our campus visits, I understood the mission of AMPF, so the fact they offered me this opportunity was huge for me,” he said. “The internship had a big impact on my life in terms of the technical and soft skills I gained.”

Building the Workforce

Launching new careers is just one of AMPF’s goals in testing new manufacturing technologies and growing the future U.S. workforce. Since 2022, AMPF has improved the manufacturing process at every stage of the talent pipeline — from giving corporate researchers space to test and adopt AI automation technologies to training and upskilling their employees. Collectively, GTMI and AMPF’s efforts have led to a stronger, bigger network of manufacturers that other companies and the U.S. government can rely on.

“We are going to need to manufacture more in the U.S. — from computer chips to cars — so we want to create jobs and fill them,” said Tom Kurfess, GTMI’s executive director. “We need more people working in the manufacturing sector, and we've got to make these jobs better and make people more efficient in them.”

AI is one way to boost efficiency, but artificial intelligence won’t cut humans out of the process entirely. Rather, people will be integral to monitoring the systems and advancing them. As AI becomes more widely adopted, a college degree won’t necessarily be required to work in the AI field.

“Our workforce is going to need the next generation of employees to be amenable to retraining as the technology updates,” said Aaron Stebner, a co-director of the Georgia Artificial Intelligence Manufacturing program (AIM). A statewide program, Georgia AIM helps fund AMPF and sponsored Trigger’s internship. “Education is going to be more of a lifelong learning process, and Georgia Tech can be at the forefront of that.”

While GTMI already integrates AI into many processes, it remains committed to staying ahead of the curve with the latest technologies that could boost manufacturing. The facility is in the process of an expansion that will nearly triple its size and make AMPF the leading facility for demonstrating what a hyperconnected and AI-driven manufacturing enterprise looks like. This will enable GTMI to build and sustain these educational pipelines, which is key to its work.

“We’re developing the workforce for the future, not of the future,” explained Donna Ennis, a co-director of Georgia AIM. “It’s AI today, but it could be something else five years from now. We are focused on creating a highly skilled, resilient workforce.”

Part of Georgia AIM’s role is creating the pipelines that people like Trigger can follow. From bringing a mobile lab to technical colleges to hosting robotics competitions at schools, these efforts span the state of Georgia and touch populations from “K to gray.”

“Kids don’t say they want to be a manufacturer when they grow up, but that’s because they don’t know it’s a viable career path,” Ennis said. “We’re making manufacturing cool again.”

Creating Corporate Connection

To create these job opportunities, GTMI is also partnering with corporations. Companies can join a consortium to access the AMPF research facilities and collaborate with researchers. Any size or type of company can take advantage of AMPF facilities — from corporations including AT&T and Siemens to small startups like Alegna, which licenses and commercializes Navy research.

“The ability to manufacture domestically is critical, not only for national security purposes, but also to keep the U.S. economically competitive,” said Steven Ferguson, a principal research scientist and executive director for the GT Manufacturing 4.0 Consortium. “Having the AMPF puts Georgia Tech within the innovation epicenter for these areas and will help us reshore manufacturing.”

The benefit of such an arrangement is twofold. Companies can work with the newest manufacturing technologies and make their own advances, and Georgia Tech builds a network of manufacturers across the state and world that students can work with. For example, AT&T uses the AMPF to test sensors for expanding personal 5G networks, and George W. Woodruff School of Mechanical Engineering Professor Carolyn Seepersad has Ph.D. students funded by a Siemens partnership through AMPF.

Trigger was able to connect and collaborate with some of these corporations and researchers during his internship. “I told them about my interest in machine learning because I wanted to see how they were integrating machine learning into their research projects,” he said. “All of them invited me to come by to observe and be part of the research.”

Starting a New Path

Because of his research collaborations during his AMPF internship, Trigger now has a new focus. “The internship clarified for me that AI is where everybody is going,” he explained. He wants to be at the forefront of AI manufacturing and hopes to pursue a certificate in machine learning next.

While he knows he still has much to learn, AMPF gave Trigger a foot in the door and confidence about the future. He — and other veterans like him — will help build the workforce that propels America forward in manufacturing.

News Contact

Tess Malone, Senior Research Writer/Editor

tess.malone@gatech.edu

Feb. 17, 2025

Men and women in California put their lives on the line when battling wildfires every year, but there is a future where machines powered by artificial intelligence are on the front lines, not firefighters.

However, this new generation of self-thinking robots would need security protocols to ensure they aren’t susceptible to hackers. To integrate such robots into society, they must come with assurances that they will behave safely around humans.

It raises the question: can you guarantee the safety of something that doesn’t exist yet? It’s something Assistant Professor Glen Chou hopes to accomplish by developing algorithms that will enable autonomous systems to learn and adapt while acting with safety and security assurances.

He plans to launch research initiatives, in collaboration with the School of Cybersecurity and Privacy and the Daniel Guggenheim School of Aerospace Engineering, to secure this new technological frontier as it develops.

“To operate in uncertain real-world environments, robots and other autonomous systems need to leverage and adapt a complex network of perception and control algorithms to turn sensor data into actions,” he said. “To obtain realistic assurances, we must do a joint safety and security analysis on these sensors and algorithms simultaneously, rather than one at a time.”

This end-to-end method would proactively look for flaws in the robot’s systems rather than wait for them to be exploited. This would lead to intrinsically robust robotic systems that can recover from failures.

Chou said this research will be useful in other domains, including advanced space exploration. If a space rover is sent to one of Saturn’s moons, for example, it needs to be able to act and think independently of scientists on Earth.

Aside from fighting fires and exploring space, this technology could perform maintenance in nuclear reactors, automatically maintain the power grid, and make autonomous surgery safer. It could also bring assistive robots into the home, enabling higher standards of care.

This is a challenging domain where safety, security, and privacy concerns are paramount due to frequent, close contact with humans.

This work will begin in the newly established Trustworthy Robotics Lab at Georgia Tech, which Chou directs. He and his Ph.D. students will design principled algorithms that enable general-purpose robots and autonomous systems to operate capably, safely, and securely with humans while remaining resilient to real-world failures and uncertainty.

Chou earned dual bachelor’s degrees in electrical engineering and computer sciences and in mechanical engineering from the University of California, Berkeley, in 2017, and a master’s and Ph.D. in electrical and computer engineering from the University of Michigan in 2019 and 2022, respectively. He was a postdoc at the MIT Computer Science & Artificial Intelligence Laboratory prior to joining Georgia Tech in November 2024. He is a recipient of National Defense Science and Engineering Graduate and NSF Graduate Research fellowships and was named a Robotics: Science and Systems Pioneer in 2022.

News Contact

John (JP) Popham

Communications Officer II

College of Computing | School of Cybersecurity and Privacy

Feb. 11, 2025

Georgia Tech researchers have developed a groundbreaking 3D-printed, bioresorbable heart valve that promotes tissue regeneration, potentially eliminating the need for repeated surgeries and offering a transformative solution for both adult and pediatric heart patients.

Feb. 10, 2025

When Ashley Cotsman arrived as a freshman at Georgia Tech, she didn’t know how to code. Now, the fourth-year Public Policy student is leading a research project on AI and decarbonization technologies.

When Cotsman joined the Data Science and Policy Lab as a first-year student, “I had zero skills or knowledge in big data, coding, anything like that,” she said.

But she was enthusiastic about the work. And the lab, led by Associate Professor Omar Asensio in the School of Public Policy, included Ph.D., master’s, and undergraduate students from a variety of degree programs who taught Cotsman how to code on the fly.

She learned how to run simple scripts and web scrapes and assisted with statistical analyses, policy research, writing, and editing. At 19, Cotsman was published for the first time. Now, she’s gone from mentee to mentor and is leading one of the research projects in the lab.

“I feel like I was just this little freshman who had no clue what I was doing, and I blinked, and now I’m conceptualizing a project and coming up with the research design and writing — it’s a very surreal moment,” she said.

Cotsman, right, presenting a research poster on electric vehicle charging infrastructure, another project she worked on with Asensio and the Data Science and Policy Lab.

What’s the project about?

Cotsman’s project, “Scaling Sustainability Evaluations Through Generative Artificial Intelligence,” uses the large language model GPT-4 to analyze the sea of sustainability reports that organizations in every sector publish each year.

The authors, including Celina Scott-Buechler at Stanford University, Lucrezia Nava at the University of Exeter, David Reiner at the University of Cambridge Judge Business School, and Asensio, aim to understand how favorability toward decarbonization technologies varies by industry and over time.

“There are thousands of reports, and they are often long and filled with technical jargon,” Cotsman said. “From a policymaker’s standpoint, it’s difficult to get through. So, we are trying to create a scalable, efficient, and accurate way to quickly read all these reports and get the information.”

How is it done?

The team used GPT-4 to search, analyze, and identify trends across 95,000 mentions of specific technologies in 25 years of sustainability reports. What would take someone 80 working days to read and evaluate took the model about eight hours, Cotsman said. Notably, GPT-4 did not require extensive task-specific training data, and it applied the same rules uniformly to all the data it analyzed, she added.
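As a rough check on that time saving, the snippet below turns the reported figures into throughput. It assumes an eight-hour working day, which the article does not state; only "80 working days," "about eight hours," and "95,000 mentions" come from the text.

```python
# Rough throughput comparison using the figures reported in the article.
# ASSUMPTION: one "working day" is 8 hours; the article does not say.
MENTIONS = 95_000      # technology mentions analyzed
HUMAN_DAYS = 80        # estimated human reading time, in working days
HOURS_PER_DAY = 8      # assumed length of a working day
MODEL_HOURS = 8        # approximate model run time

human_hours = HUMAN_DAYS * HOURS_PER_DAY   # total human hours
speedup = human_hours / MODEL_HOURS        # how many times faster the model is
human_rate = MENTIONS / human_hours        # mentions per hour, human reader
model_rate = MENTIONS / MODEL_HOURS        # mentions per hour, model

print(f"human: {human_hours} h, about {human_rate:.0f} mentions/h")
print(f"model: {MODEL_HOURS} h, about {model_rate:.0f} mentions/h")
print(f"speedup: about {speedup:.0f}x")
```

Under the eight-hour-day assumption, the human estimate is 640 hours, so the model run is roughly 80 times faster.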

So, rather than fine-tuning with thousands of human-labeled examples, “it’s more like prompt engineering,” Cotsman said. “Our research demonstrates what logic and safeguards to include in a prompt and the best way to create prompts to get these results.”

The team used chain-of-thought prompting, which guides a generative AI system through each step of its reasoning, with contextual reasoning, counterexamples, and exceptions, rather than simply asking for the answer. They combined this with few-shot learning on misidentified cases, which supplies increasingly refined examples as additional guidance, a process the AI community calls “alignment.”
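The few-shot refinement step can be pictured as a loop: find the cases the current prompt gets wrong, then fold them back into the prompt as new examples. The sketch below is a minimal illustration under stated assumptions, not the team's code. `classify` is a hypothetical stand-in for a prompted GPT-4 call, `toy_classify` is a deliberately naive keyword rule used only so the loop can run, and the sentences are invented.

```python
from typing import Callable, List, Tuple

# A labeled example: (passage text, gold label).
Example = Tuple[str, str]
# Hypothetical stand-in for a prompted LLM call: (passage, few-shot pool) -> label.
Classifier = Callable[[str, List[Example]], str]


def refine_few_shot(
    examples: List[Example],
    validation: List[Example],
    classify: Classifier,
    max_rounds: int = 3,
) -> List[Example]:
    """Fold misclassified validation cases back into the few-shot pool."""
    pool = list(examples)
    for _ in range(max_rounds):
        # Cases the current prompt gets wrong that are not yet used as examples.
        misses = [
            (text, gold)
            for text, gold in validation
            if classify(text, pool) != gold and (text, gold) not in pool
        ]
        if not misses:
            break  # every remaining validation case is handled
        pool.extend(misses)  # add them as more specific guidance
    return pool


def toy_classify(text: str, pool: List[Example]) -> str:
    """Toy classifier: copy the label of a matching example, else a keyword rule."""
    for example_text, label in pool:
        if example_text == text:
            return label
    return "favorable" if "invest" in text else "neutral"


seed = [("We invest in carbon capture.", "favorable")]
validation = [
    ("We invest in carbon capture.", "favorable"),
    ("We will not invest in carbon capture.", "opposing"),
    ("Carbon capture is discussed in Section 4.", "neutral"),
]
refined = refine_few_shot(seed, validation, toy_classify)
print(len(refined))  # → 2
```

The keyword rule mislabels the negated sentence as favorable, so the loop adds it as an explicit opposing example; with a real model, each added example would instead be a nuanced case the prompt had been getting wrong.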

The final prompt included definitions of favorable, neutral, and opposing communications, an example of how each might appear in the text, and an example of how to classify nuanced wording, values, or human principles.
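A prompt with that structure (label definitions, one worked example per label, and a step-by-step instruction before the answer) might be assembled as follows. This is a sketch only: the definition wording, example sentences, and the `build_prompt` helper are hypothetical, since the article does not publish the team's actual prompt.

```python
from typing import List, Tuple

# Hypothetical label definitions; the team's actual wording is not published.
DEFINITIONS = {
    "favorable": "The passage supports or promotes adopting the technology.",
    "neutral": "The passage mentions the technology without taking a position.",
    "opposing": "The passage argues against or casts doubt on the technology.",
}


def build_prompt(mention: str, examples: List[Tuple[str, str, str]]) -> str:
    """Assemble a chain-of-thought, few-shot classification prompt.

    Each example is (passage, reasoning, label), so the model sees the
    reasoning steps that lead to a label, not just the label itself.
    """
    lines = ["Classify how the passage talks about the technology.", "", "Definitions:"]
    for label, meaning in DEFINITIONS.items():
        lines.append(f"- {label}: {meaning}")
    lines += ["", "Examples:"]
    for text, reasoning, label in examples:
        lines += [f"Passage: {text}", f"Reasoning: {reasoning}", f"Label: {label}", ""]
    # Chain-of-thought instruction: ask for reasoning before the final answer.
    lines.append("Think step by step, consider exceptions and counterexamples, then give one label.")
    lines += [f"Passage: {mention}", "Reasoning:"]
    return "\n".join(lines)


examples = [
    ("We plan to expand our use of heat pumps.",
     "The company commits to more adoption.", "favorable"),
    ("Heat pumps are listed in Appendix B.",
     "A bare mention with no stance.", "neutral"),
    ("While promising, hydrogen remains too costly for our fleet.",
     "Polite wording, but the conclusion rejects adoption.", "opposing"),
]
prompt = build_prompt("Carbon capture is central to our 2030 plan.", examples)
print(prompt)
```

Ending the prompt at "Reasoning:" nudges the model to write out its reasoning before committing to a label, which is the core idea of chain-of-thought prompting.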

It achieved an F1 score of 0.86. The F1 score, the harmonic mean of precision and recall, measures how well a model gets things right on a scale from zero to one. The score is “very high” for a project with essentially zero training data and a specialized dataset, Cotsman said. In contrast, her first project with the group used a large language model called BERT and required 9,000 lines of expert-labeled training data to achieve a similar F1 score.
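To make the metric concrete, the sketch below computes F1 for a three-label task. The article does not say how scores across the favorable, neutral, and opposing labels were combined, so this assumes a macro average (the unweighted mean of per-label F1); the four toy labels are invented.

```python
from typing import List


def f1_for_label(gold: List[str], pred: List[str], label: str) -> float:
    """F1 for one label: harmonic mean of its precision and recall."""
    tp = sum(1 for g, p in zip(gold, pred) if g == label and p == label)
    fp = sum(1 for g, p in zip(gold, pred) if g != label and p == label)
    fn = sum(1 for g, p in zip(gold, pred) if g == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def macro_f1(gold: List[str], pred: List[str]) -> float:
    """ASSUMPTION: unweighted mean of per-label F1 scores (macro average)."""
    labels = sorted(set(gold) | set(pred))
    return sum(f1_for_label(gold, pred, lab) for lab in labels) / len(labels)


gold = ["favorable", "favorable", "neutral", "opposing"]
pred = ["favorable", "neutral", "neutral", "opposing"]
score = macro_f1(gold, pred)
print(round(score, 4))  # → 0.7778
```

Here one favorable passage is mislabeled as neutral, which lowers recall for "favorable" and precision for "neutral", giving per-label scores of 2/3, 2/3, and 1.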

“It’s wild to me that just two years ago, we spent months and months training these models,” Cotsman said. “We had to annotate all this data and secure dedicated compute nodes or GPUs. It was painstaking. It was expensive. It took so long. And now, two years later, here I am. Just one person with zero training data, able to use these tools in such a scalable, efficient, and accurate way.”

Through the Federal Jackets Fellowship program, Cotsman was able to spend the Fall 2024 semester as a legislative intern in Washington, D.C.

Why does it matter?

While Cotsman’s colleagues focus on the results of the project, she is more interested in the methodology. The prompts can be used for preference learning on any type of “unstructured data,” such as video or social media posts, particularly in studies of technology adoption for environmental issues. Asensio and the Data Science and Policy Lab use the technique in many of their recent projects.

“We can very quickly use GPT-4 to read through these things and pull out insights that are difficult to do with traditional coding,” Cotsman said. “Obviously, the results will be interesting on the electrification and carbon side. But what I’ve found so interesting is how we can use these emerging technologies as tools for better policymaking.”

While concerns over the speed of AI development are justifiable, she said, her research experience at Georgia Tech has given her an optimistic view of the new technology.

“I’ve seen very quickly how, when used for good, these things will transform our world for the better. From the policy standpoint, we’re going to need a lot of regulation. But from the standpoint of academia and research, if we embrace these things and use them for good, I think the opportunities are endless for what we can do.”

Feb. 06, 2025

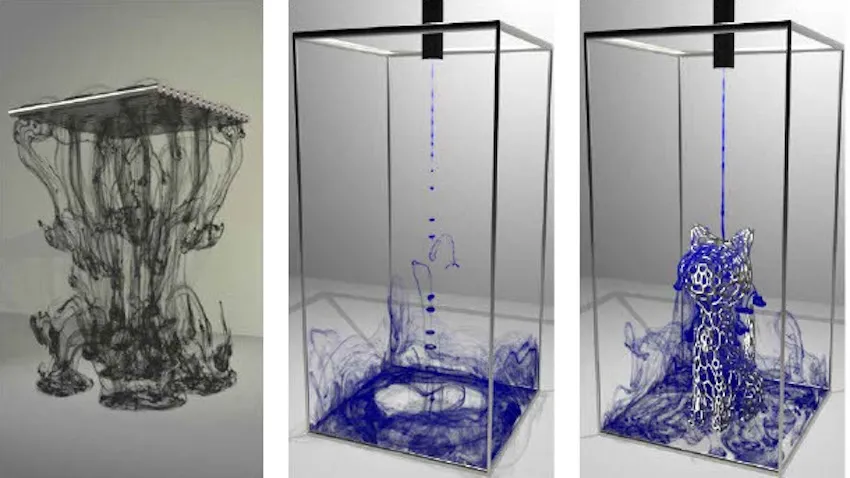

Calculating and visualizing a realistic trajectory of ink spreading through water has been a longstanding and enormous challenge for computer graphics and physics researchers.

When a drop of ink hits the water, it typically sinks forward, forming a tail before various ink streams branch off in different directions. The motion of the ink’s molecules as they mix with the water appears random, but it is actually determined by the interaction between the water’s viscosity (thickness) and vorticity (how much it rotates at a given point).
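Vorticity, the "how much it rotates" quantity, can be estimated on a grid with finite differences. For 2D flow it is omega = dv/dx - du/dy, and for a fluid spinning rigidly at angular speed Omega it equals 2 * Omega. The sketch below is a generic textbook estimate, not the method from the paper; the grid size, spacing, and rotating test field are illustrative choices.

```python
from typing import List

Grid = List[List[float]]


def vorticity(u: Grid, v: Grid, dx: float, dy: float) -> Grid:
    """Central-difference estimate of omega = dv/dx - du/dy.

    u[j][i] and v[j][i] are the x and y velocity at column i, row j.
    Boundary points are left at 0.0 to keep the sketch short.
    """
    ny, nx = len(u), len(u[0])
    w = [[0.0] * nx for _ in range(ny)]
    for j in range(1, ny - 1):
        for i in range(1, nx - 1):
            dv_dx = (v[j][i + 1] - v[j][i - 1]) / (2 * dx)
            du_dy = (u[j + 1][i] - u[j - 1][i]) / (2 * dy)
            w[j][i] = dv_dx - du_dy
    return w


# Illustrative field: rigid rotation at angular speed omega about the grid center.
omega = 1.5
n, h = 5, 0.1
coords = [(k - n // 2) * h for k in range(n)]
u = [[-omega * y for _ in coords] for y in coords]  # u = -omega * y
v = [[omega * x for x in coords] for _ in coords]   # v = omega * x
w = vorticity(u, v, h, h)
print(w[2][2])  # approximately 2 * omega
```

The factor of two is a standard result: vorticity is twice the local angular velocity of a fluid element.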

“If the water is more viscous, there will be fewer branches. If the water is less viscous, it will have more branches,” said Zhiqi Li, a graduate computer science student.
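The general trend Li describes, where more viscous water keeps fewer fine structures, can be seen in the simplest viscous model: the diffusion equation du/dt = nu * d2u/dx2, where nu is the viscosity. The sketch below is a textbook explicit finite-difference scheme on a periodic 1D profile, not the team's viscosity model, and it only shows that higher viscosity damps small-scale variation faster. Grid size, time step, and viscosities are illustrative.

```python
import math
from typing import List


def diffuse(u: List[float], nu: float, dt: float, dx: float, steps: int) -> List[float]:
    """Explicit finite-difference steps of du/dt = nu * d2u/dx2 on a periodic grid."""
    r = nu * dt / dx**2
    if r > 0.5:
        raise ValueError("unstable: nu*dt/dx^2 must be <= 0.5")
    n = len(u)
    for _ in range(steps):
        # u[i - 1] wraps to u[-1] at i = 0, giving periodic boundaries.
        u = [u[i] + r * (u[(i + 1) % n] - 2 * u[i] + u[i - 1]) for i in range(n)]
    return u


def energy(u: List[float]) -> float:
    """Mean squared value: a simple measure of how much structure remains."""
    return sum(x * x for x in u) / len(u)


n = 64
dx = 1.0 / n
dt = 0.01
u0 = [math.sin(2 * math.pi * 8 * i / n) for i in range(n)]  # fine-scale profile
low = diffuse(u0, nu=0.001, dt=dt, dx=dx, steps=50)
high = diffuse(u0, nu=0.01, dt=dt, dx=dx, steps=50)
print(energy(u0), energy(low), energy(high))
```

After the same number of steps, the high-viscosity run retains far less of the original fine-scale pattern than the low-viscosity run.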

Li is the lead author of Particle-Laden Fluid on Flow Maps, a best paper winner at the December 2024 ACM SIGGRAPH Asia conference. Assistant Professor Bo Zhu advises Li and is the co-author of six papers accepted to the conference.

Zhu said they must correctly calculate and simulate the interaction between viscosity and vorticity before they can accurately predict the ink trajectory.

“The ink branches generate based on the intricate interaction between the vorticities and the viscosity over time, which we simulated,” Zhu said. “Using a standard method to simulate the physics will cause most of the structures to fade quickly without being able to see any detailed hierarchies.”

Zhu added that no researchers had developed such a method until he and his co-authors proposed a new way to solve the underlying equations. Their breakthrough has enabled the most accurate simulations of ink diffusion to date.

“Ink diffusion is one of the most visually striking examples of particle-laden flow,” Zhu said.

“We introduce a new viscosity model that solves for the interaction between vorticity and viscosity from a particle flow map perspective. This new simulation lets you map physical quantities from a certain time frame, allowing us to see particle trajectory.”

In computer simulations, flow is the digital representation of a gas or liquid moving through a system. Users can simulate these liquids and gases under different scenarios and study pressure, velocity, and temperature.

A particle-laden flow depicts solid particles mixing within a continuous fluid phase, such as dust or water sediment. A flow map traces particle motion from the start point to the endpoint.
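The basic idea of a flow map, carrying a particle from its start point to its end point, can be sketched by integrating the particle's position through a velocity field. This minimal example uses classical fourth-order Runge-Kutta and assumes a known analytic field (rigid rotation); in an actual fluid simulation the velocity field is itself computed, and the paper's particle flow map method involves much more than this.

```python
import math
from typing import Callable, Tuple

Vec = Tuple[float, float]
Field = Callable[[float, float, float], Vec]  # (x, y, t) -> (u, v)


def flow_map(field: Field, start: Vec, t0: float, t1: float, steps: int) -> Vec:
    """Trace one particle from time t0 to t1 with classical RK4.

    The returned end point is the flow map evaluated at `start`.
    """
    x, y = start
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = field(x, y, t)
        k2 = field(x + h / 2 * k1[0], y + h / 2 * k1[1], t + h / 2)
        k3 = field(x + h / 2 * k2[0], y + h / 2 * k2[1], t + h / 2)
        k4 = field(x + h * k3[0], y + h * k3[1], t + h)
        x += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += h
    return x, y


def rotation(x: float, y: float, t: float) -> Vec:
    """Illustrative field: rigid counterclockwise rotation at unit angular speed."""
    return -y, x


# A quarter turn should carry the particle from (1, 0) to about (0, 1).
end = flow_map(rotation, (1.0, 0.0), 0.0, math.pi / 2, 200)
print(end)
```

Because the map records where each particle ends up, quantities attached to particles (such as ink concentration) can be carried along the same trajectories instead of being repeatedly re-sampled on a grid.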

Duowen Chen, a computer science Ph.D. student also advised by Zhu and a co-author of the paper, said previous efforts to simulate ink diffusion depended on guesswork. Researchers either used limited traditional calculation methods or artificial designs.

“They add in a noise model or an artificial model to create vortical motions, but our method does not require adding any artificial vortical components,” Chen said. “We have a better viscosity force calculation and vortical preservation, and the two give a better ink simulation.”

Zhu also won a best paper award at the 2023 SIGGRAPH Asia conference for work showing how neural network maps created through artificial intelligence (AI) could close gaps in solving difficult equations. For his new paper, he said, it was essential to find a way to simulate ink diffusion accurately without relying on AI.

“If we don’t have to train a large-scale neural network, then the computation time will be much faster, and we can reduce the computation and memory costs,” Zhu said. “The particle flow map representation can preserve those particle structures better than the neural network version, and they are a widely used data structure in traditional physics-based simulation.”

News Contact

Ben Snedeker, Communications Manager

Georgia Tech College of Computing

albert.snedeker@cc.gatech.edu